Introduction

In the previous post, we delved into the fundamentals of Kubernetes (k8s) components, such as Clusters, Nodes, Objects, and Controllers. Now, we're shifting our focus to a critical addition to many Kubernetes deployments: the service mesh. Among the various service mesh options, Istio, backed by industry giants like Google, IBM, and Lyft, has gained significant popularity.

In this article, we'll explore why a service mesh matters in Kubernetes, its key features, how it differs from native Kubernetes and from an API gateway, and some best practices for adopting one.

Why Service Mesh Emerged

A service mesh is a dedicated infrastructure layer designed to manage and control communication between microservices in a Kubernetes environment. It emerged as a response to the complexity of managing large-scale, dynamic, and distributed systems. Its role in Kubernetes is to handle service-to-service communication and provide essential features such as traffic control, resiliency, security, and observability, largely without changes to application code.

To better understand the relationship between Kubernetes components and service mesh, let’s use another non-technical analogy: consider a bustling city with various districts, each with its unique characteristics and activities.

The services running in a Kubernetes cluster are like these districts, while the service mesh serves as the network of roads and transportation systems connecting them. Just as an efficient transportation system ensures smooth movement between districts, a service mesh keeps communication between services reliable and manageable.

A service mesh addresses several challenges that native Kubernetes and microservice architectures leave to each application, such as fine-grained load balancing, traffic routing, security, and monitoring. Left to individual services, these concerns make applications harder to operate, secure, and scale. By handling them in a shared infrastructure layer, a service mesh improves the resilience of applications running on Kubernetes and, through smarter traffic management, can improve their performance as well.

In short, a service mesh plays a pivotal role in Kubernetes by ensuring reliable communication between microservices and providing vital features that support application performance and resilience.

Key Features of Service Mesh

To illustrate the key features of a service mesh, let's consider a specific example: an e-commerce platform with multiple microservices, including user authentication, product catalog, shopping cart, and payment processing. In this scenario, the service mesh manages communication between these microservices, helping ensure a smooth user experience.

- Traffic Control: The service mesh enables fine-grained traffic routing and load balancing between microservices. For instance, during peak times it can send more requests to less-loaded instances of a service, or distribute requests evenly so that no single instance is overloaded.

- Resiliency: In our e-commerce platform example, the service mesh provides mechanisms for handling unexpected issues. If the payment processing service returns a transient error, the mesh can automatically retry the request, within configured timeouts and retry limits, so that a brief glitch does not force the user to start checkout over.

- Fault Tolerance Management: The service mesh can detect failing instances of a microservice, through mechanisms such as outlier detection and circuit breaking, and stop sending traffic to them, preventing one failure from affecting the entire platform. If the product catalog service goes down, the mesh can route users to a fallback, such as a service that serves a cached version of the catalog, to keep the experience seamless.

- Security: The service mesh enhances the security of our e-commerce platform by encrypting communication between microservices with mutual TLS and enforcing access control between services. This helps prevent unauthorized access to sensitive data, such as user credentials or payment information.

- Observability: The service mesh collects detailed metrics, logs, and traces for traffic between microservices, making it easier to track the performance of our e-commerce platform. It helps identify bottlenecks, optimize resource usage, and troubleshoot issues quickly.
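In a real mesh, retries are configured declaratively (in Istio, through retry and timeout settings on its routing rules) and carried out by the sidecar proxy rather than written into application code; a fallback such as a cached catalog would be a separate destination the mesh routes to. The Python sketch below only models the combined behavior from the e-commerce example: retry a failing call with exponential backoff, then fall back to a degraded response. `TransientError`, `make_flaky`, and the delay values are hypothetical.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical stand-in for a retryable failure, such as an HTTP 503."""


def call_with_retries(
    primary: Callable[[], T],
    fallback: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.05,
) -> T:
    """Call `primary`, retrying transient failures with exponential backoff.

    If every attempt fails, return `fallback()` instead of raising.
    """
    for attempt in range(attempts):
        try:
            return primary()
        except TransientError:
            if attempt < attempts - 1:
                # Wait base_delay, 2 * base_delay, 4 * base_delay, ... between tries.
                time.sleep(base_delay * 2 ** attempt)
    # All attempts failed: degrade gracefully (e.g. serve a cached catalog).
    return fallback()


def make_flaky(failures: int, result: str) -> Callable[[], str]:
    """Hypothetical upstream that fails `failures` times, then succeeds."""
    calls = 0

    def call() -> str:
        nonlocal calls
        calls += 1
        if calls <= failures:
            raise TransientError(f"upstream unavailable (call {calls})")
        return result

    return call


live = call_with_retries(make_flaky(2, "live catalog"), lambda: "cached catalog")
degraded = call_with_retries(make_flaky(5, "live catalog"), lambda: "cached catalog")
print(live)      # live catalog
print(degraded)  # cached catalog
```

With three attempts, an upstream that fails twice still succeeds on the third try, while one that keeps failing falls through to the cached response instead of surfacing an error to the user.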

This e-commerce example shows how a service mesh offers essential features that support the efficient operation and maintenance of applications running on Kubernetes.

Differences from Native Kubernetes

Kubernetes is a powerful container orchestration system that provides a robust platform for deploying and managing containerized applications. However, as the number of applications and services running on a cluster grows, managing traffic, ensuring reliability, and maintaining security become increasingly complex. This is where a service mesh comes in.

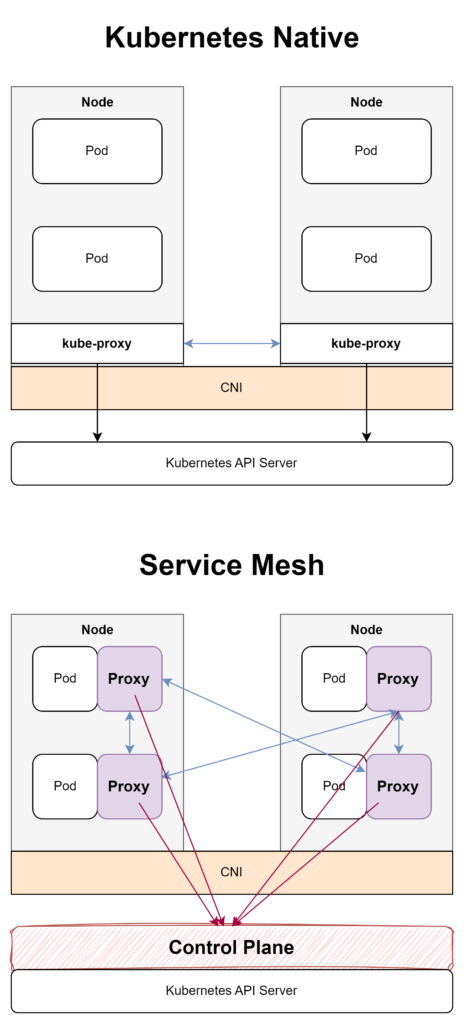

A service mesh is a layer of infrastructure that sits between the application code and the network. It provides a set of features that help manage and monitor traffic between services, improve reliability, and enhance security. In this section, we'll take a closer look at the key differences between native Kubernetes and Kubernetes with a service mesh.

Control Plane and Sidecar Injection

One of the key differences between native Kubernetes and Kubernetes with a service mesh is the control plane. In native Kubernetes, the Kubernetes control plane, centered on the API server, manages the cluster. A service mesh adds a second control plane alongside it (in Istio, this is istiod). The Kubernetes API server still manages the cluster, while the mesh control plane configures the sidecar proxies, distributing service discovery information, traffic management rules, and security policies to them.
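To make the split between the mesh control plane and the data plane concrete, here is a toy model in Python. It is not how Istio is implemented: in Istio, istiod streams configuration to the Envoy sidecars through Envoy's xDS APIs. The `ControlPlane` and `Sidecar` classes, the deny-by-default rule, and the workload names are hypothetical; the caller identities follow the SPIFFE format Istio uses for workloads (`spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>`).

```python
from dataclasses import dataclass, field


@dataclass
class Sidecar:
    """Data plane: a proxy next to one workload, enforcing the policy it last received."""

    workload: str
    allowed_callers: set[str] = field(default_factory=set)

    def receive_policy(self, allowed: set[str]) -> None:
        self.allowed_callers = set(allowed)

    def handle(self, caller_identity: str) -> int:
        # Deny by default (a simplification of this sketch): unknown callers get 403.
        return 200 if caller_identity in self.allowed_callers else 403


class ControlPlane:
    """Control plane: stores policies and pushes them to registered sidecars."""

    def __init__(self) -> None:
        self.sidecars: dict[str, Sidecar] = {}
        self.policies: dict[str, set[str]] = {}

    def register(self, sidecar: Sidecar) -> None:
        self.sidecars[sidecar.workload] = sidecar
        # A newly connected proxy immediately receives the current policy.
        sidecar.receive_policy(self.policies.get(sidecar.workload, set()))

    def set_policy(self, workload: str, allowed: set[str]) -> None:
        self.policies[workload] = set(allowed)
        # Push the change to the proxy; the application itself is untouched.
        if workload in self.sidecars:
            self.sidecars[workload].receive_policy(allowed)


# SPIFFE-style workload identities (trust domain / namespace / service account).
CART = "spiffe://cluster.local/ns/shop/sa/cart"
CATALOG = "spiffe://cluster.local/ns/shop/sa/catalog"

control_plane = ControlPlane()
payment_proxy = Sidecar("payment")
control_plane.register(payment_proxy)
control_plane.set_policy("payment", {CART})

print(payment_proxy.handle(CART))     # 200
print(payment_proxy.handle(CATALOG))  # 403
```

The point of the design is that policy lives in one place and is enforced in many: changing who may call the payment service is a control-plane update, not a code change in any service.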

Another key difference is the use of sidecar injection. In a Kubernetes deployment with a service mesh, each pod includes a sidecar proxy container that intercepts and manages the pod's network traffic. The sidecar is added automatically when the pod is created, by a Kubernetes mutating admission webhook; in Istio, this is typically enabled per namespace with the `istio-injection=enabled` label.
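The injection step can be modeled as a function that receives a pod spec and returns a mutated one. This is a much-simplified sketch: a real mutating webhook receives an AdmissionReview request and answers with a JSON patch, and Istio's injector adds far more configuration than shown here. The label `istio-injection=enabled` and the container names `istio-proxy` and `istio-init` match what Istio uses (some installations replace the init container with the Istio CNI plugin); the image name and pod contents are placeholders.

```python
import copy
from typing import Any

# Namespaces labeled istio-injection=enabled get automatic sidecar injection.
INJECTION_LABEL = "istio-injection"
PROXY_IMAGE = "registry.example/proxy:hypothetical"  # placeholder, not a real image


def inject_sidecar(pod: dict[str, Any], namespace_labels: dict[str, str]) -> dict[str, Any]:
    """Simplified model of an injection webhook's decision at pod creation time.

    Returns the pod unchanged if its namespace has not opted in; otherwise
    returns a mutated copy with a proxy sidecar and a traffic-setup init container.
    """
    if namespace_labels.get(INJECTION_LABEL) != "enabled":
        return pod
    mutated = copy.deepcopy(pod)
    spec = mutated["spec"]
    if any(c["name"] == "istio-proxy" for c in spec["containers"]):
        return mutated  # already injected: keep the mutation idempotent
    # The sidecar proxy runs alongside the application container in the same pod.
    spec["containers"].append({"name": "istio-proxy", "image": PROXY_IMAGE})
    # An init container sets up iptables rules that redirect pod traffic to the proxy.
    spec.setdefault("initContainers", []).append({"name": "istio-init", "image": PROXY_IMAGE})
    return mutated


pod = {
    "metadata": {"name": "cart-7d9f", "namespace": "shop"},
    "spec": {"containers": [{"name": "cart", "image": "shop/cart:1.0"}]},
}
injected = inject_sidecar(pod, {"istio-injection": "enabled"})
skipped = inject_sidecar(pod, {})

print([c["name"] for c in injected["spec"]["containers"]])  # ['cart', 'istio-proxy']
print([c["name"] for c in skipped["spec"]["containers"]])   # ['cart']
```

Because the mutation happens at admission time, the application's own Deployment manifest never mentions the proxy.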

By using sidecar injection, service mesh can provide a number of powerful features that are difficult to achieve with native Kubernetes. For example, sidecar containers can be used to manage traffic routing, provide service discovery, and enforce security policies.

Proxy Network

Kubernetes' built-in Service networking (kube-proxy) load-balances traffic at Layer 4, using only IP addresses and TCP/UDP ports; Layer 7 features are available mainly at the cluster edge, through Ingress controllers. Service mesh proxies, by contrast, operate at Layer 7 inside the cluster, so they can accommodate a broader set of requirements, including routing by HTTP URL path or headers.
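The difference between the two layers comes down to what information the routing decision can see. In the sketch below (plain Python rather than proxy configuration, with hypothetical addresses and service names), a Layer 4 router only knows the destination IP and port, so every request to the same address goes to the same backend pool, while a Layer 7 router reads the HTTP path and can send cart and payment traffic to different services.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    dst_ip: str
    dst_port: int
    path: str  # only visible to a proxy that parses HTTP (Layer 7)


# Layer 4: the routing key is just the destination address and port.
L4_TABLE = {("10.0.0.10", 80): "shop-backend-pool"}

# Layer 7: the routing key can include the HTTP path (first matching prefix wins).
L7_ROUTES = [
    ("/api/cart", "cart-service"),
    ("/api/payment", "payment-service"),
    ("/", "catalog-service"),
]


def route_l4(req: Request) -> str:
    # Every request to 10.0.0.10:80 lands on the same pool, whatever its URL.
    return L4_TABLE[(req.dst_ip, req.dst_port)]


def route_l7(req: Request) -> str:
    for prefix, backend in L7_ROUTES:
        if req.path.startswith(prefix):
            return backend
    raise LookupError(f"no route for {req.path}")


for path in ["/api/cart/items", "/api/payment/charge", "/products/42"]:
    req = Request("10.0.0.10", 80, path)
    print(f"{path:22} L4 -> {route_l4(req):18} L7 -> {route_l7(req)}")
```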

Envoy, the proxy that Istio uses as its sidecar, is a notable example. It was designed to make the network transparent to applications and to make it easy to pinpoint the source of a problem when issues arise in services. Envoy offers the following features:

- L3/L4 Proxy: Supports TCP and UDP proxying, as well as TLS client certificate authentication.

- L7 Proxy: Provides HTTP-level features such as buffering and rate limiting, and routes requests based on path, content type, runtime values, and more.

- HTTP/2: Supports both HTTP/1.1 and HTTP/2 protocols.

- Service Discovery: Dynamically receives service, cluster, HTTP route, and listener socket configuration through its discovery APIs, and performs health checks on the services it routes to.

- Advanced Load Balancing: Minimizes the impact of service failures through automatic retries, outlier detection, and circuit breaking.
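Envoy's outlier detection ejects upstream hosts that keep failing and lets them back in after a timeout. The sketch below models that idea together with round-robin balancing; it is a simplification, not Envoy's algorithm (Envoy, for instance, lengthens the ejection time for hosts that are ejected repeatedly and caps the share of hosts that may be ejected at once). Envoy's circuit breakers proper, which limit concurrent connections and requests, are not modeled. The class name, parameters, and host names are hypothetical; passing `now` explicitly keeps the example deterministic.

```python
import itertools
import time
from typing import Optional


class OutlierDetectingBalancer:
    """Round-robin balancer that temporarily ejects hosts after repeated errors.

    A host is ejected after `max_errors` consecutive failures and becomes
    eligible again once `eject_seconds` have passed.
    """

    def __init__(self, hosts: list[str], max_errors: int = 5, eject_seconds: float = 30.0) -> None:
        self.hosts = list(hosts)
        self.max_errors = max_errors
        self.eject_seconds = eject_seconds
        self.consecutive_errors = {h: 0 for h in self.hosts}
        self.ejected_until: dict[str, float] = {}
        self._cycle = itertools.cycle(self.hosts)

    def pick(self, now: Optional[float] = None) -> str:
        now = time.monotonic() if now is None else now
        # Walk the ring at most once, skipping hosts that are still ejected.
        for _ in range(len(self.hosts)):
            host = next(self._cycle)
            if self.ejected_until.get(host, 0.0) <= now:
                return host
        raise RuntimeError("no healthy hosts available")

    def report(self, host: str, ok: bool, now: Optional[float] = None) -> None:
        now = time.monotonic() if now is None else now
        if ok:
            self.consecutive_errors[host] = 0
            return
        self.consecutive_errors[host] += 1
        if self.consecutive_errors[host] >= self.max_errors:
            # Eject the host: stop sending it traffic until the ejection expires.
            self.ejected_until[host] = now + self.eject_seconds
            self.consecutive_errors[host] = 0


lb = OutlierDetectingBalancer(["pod-a", "pod-b", "pod-c"], max_errors=3, eject_seconds=30.0)
for _ in range(3):
    lb.report("pod-b", ok=False, now=0.0)  # three failures in a row

during = [lb.pick(now=1.0) for _ in range(4)]
after = [lb.pick(now=31.0) for _ in range(2)]
print(during)  # ['pod-a', 'pod-c', 'pod-a', 'pod-c']
print(after)   # ['pod-a', 'pod-b']
```

While `pod-b` is ejected its share of traffic goes to the healthy pods; once the ejection expires it rejoins the rotation automatically.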

Proxies like Envoy provide features that are challenging to implement using basic load balancers offered by cloud services or other network functions. These proxies enable more fine-grained control over service operations.

Overall, using a service mesh in Kubernetes deployments can simplify the management of complex microservice architectures, improve reliability, and enhance security. However, it's important to understand the key differences between native Kubernetes and Kubernetes with a service mesh in order to make an informed decision about which approach is right for your organization.

What's Different from an API Gateway?

If you've read this far, you may feel that an API gateway and a service mesh are similar. Although they share a few similarities, it's essential to understand their distinct characteristics and roles within a system.

API Gateway primarily focuses on managing and routing API calls for both internal and external services, as well as database access. In contrast, service mesh is designed to enhance portability, communication, and resiliency among internal enterprise systems and microservices.

Another significant difference lies in their scope of operation. An API Gateway supports routing for external applications connecting to a company's services, while a service mesh operates primarily within the scope of internal enterprise services.

In terms of digital transformation and security, API Gateway and service mesh serve different purposes. An API Gateway, especially when used alongside a service mesh, can accelerate time-to-market and secure the APIs a company exposes. A service mesh, in contrast, focuses on managing microservices to shorten delivery time, though its distributed nature can introduce security issues if policies are not applied consistently across services.

Finally, the routing and load balancing mechanisms differ. An API Gateway is an independent component that provides a single endpoint for incoming requests and handles load balancing internally. A service mesh, in contrast, runs a sidecar alongside each service as part of its local network stack; each sidecar gets the list of endpoints from a service registry and load-balances requests itself using an algorithm such as round robin.
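The contrast in the paragraph above is mainly about where load balancing runs. This Python sketch uses the same round-robin logic in both places to isolate that difference: the `ApiGateway` is one shared entry point that maps public paths to internal services, while each `Sidecar` sits next to a calling service and picks an endpoint from the registry itself. The registry contents, paths, and class names are hypothetical.

```python
from collections import defaultdict

# Hypothetical service registry: service name -> endpoint addresses.
REGISTRY = {
    "catalog": ["10.1.0.4:8080", "10.1.0.5:8080"],
    "cart": ["10.1.1.7:8080"],
}


class RoundRobinPicker:
    """Round robin over each service's endpoints, tracked per service."""

    def __init__(self, registry: dict[str, list[str]]) -> None:
        self.registry = registry
        self.counters: dict[str, int] = defaultdict(int)

    def pick(self, service: str) -> str:
        endpoints = self.registry[service]
        endpoint = endpoints[self.counters[service] % len(endpoints)]
        self.counters[service] += 1
        return endpoint


class ApiGateway:
    """Single external entry point: maps public paths to services, then balances centrally."""

    def __init__(self, routes: dict[str, str], registry: dict[str, list[str]]) -> None:
        self.routes = routes
        self.picker = RoundRobinPicker(registry)

    def handle(self, path: str) -> str:
        for prefix, service in self.routes.items():
            if path.startswith(prefix):
                return self.picker.pick(service)
        raise LookupError(f"no route for {path}")


class Sidecar:
    """Runs next to a calling service and balances that service's outbound calls."""

    def __init__(self, registry: dict[str, list[str]]) -> None:
        self.picker = RoundRobinPicker(registry)

    def call(self, service: str) -> str:
        return self.picker.pick(service)


gateway = ApiGateway({"/shop/catalog": "catalog", "/shop/cart": "cart"}, REGISTRY)
print([gateway.handle("/shop/catalog/items") for _ in range(3)])
# ['10.1.0.4:8080', '10.1.0.5:8080', '10.1.0.4:8080']

cart_sidecar = Sidecar(REGISTRY)  # the cart service's own proxy calling the catalog
print([cart_sidecar.call("catalog") for _ in range(2)])
# ['10.1.0.4:8080', '10.1.0.5:8080']
```

The gateway holds one shared balancing state for all external clients, whereas every sidecar keeps its own, so there is no central hop between internal services.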

These and other differences are summarized in the table below.

| | API Gateway | Service Mesh |
| --- | --- | --- |
| Purpose | Designed to route API calls for internal/external services and even database access | Designed to improve portability within internal enterprise systems and microservices |
| Functionality | Supports routing for external applications to connect with the company | Operates within the scope of internal enterprise services |
| API Role | Used to manage and protect APIs | APIs are used to protect the service mesh as it scales |
| Digital Transformation | When used with a service mesh, reduces time-to-market and helps ensure security | Manages microservices to reduce delivery time, but security issues may arise |
| Complexity | Endpoints are easy to manage and APIs are easy to scale | Complexity increases as endpoints expand with business needs |
| Technical Maturity | Mature technology | Relatively new technology |
| Security | Automated security policies and features | Security policies applied through manual processes |
| Routing Entity | Server side (the gateway) | Client side (the requesting service's sidecar) |
| Routing Component | Independent API gateway component introducing a separate network hop | Sidecar within the service, becoming part of the local network stack |
| Load Balancing | Provides a single endpoint and forwards requests to load-balancing components within the API gateway, which handle them | Sidecar receives the service list from a service registry and load-balances using an algorithm |
| Network | Located between the external internet and the internal service network | Located between internal services, allowing communication only within the application network boundary |
| Analytics | Collects and analyzes all API calls by users and providers | Enables analysis of all microservice components within the mesh |

By understanding these differences, you can make informed decisions on the most suitable approach for your specific use case and requirements.

3 Best Practices for Service Mesh

While introducing a service mesh can offer numerous benefits, it’s crucial to remember that it’s not always the best solution for every situation. Improper use of a service mesh could lead to more complex services and unnecessary overhead, making your application harder to maintain and troubleshoot. Therefore, it is essential to know exactly when to use a service mesh and follow the best practices to ensure its effectiveness.

The following sections cover three best practices for implementing a service mesh in your Kubernetes environment, helping you make the most of this powerful technology.

Determine the Right Use Case

Before implementing a service mesh, carefully evaluate your application's requirements and architecture. Service mesh is best suited for complex, large-scale applications with many microservices that need advanced traffic management, security, and observability features. Avoid using a service mesh for small-scale applications with minimal inter-service communication: every sidecar consumes CPU and memory and adds latency to each request, and the mesh itself is one more system to operate.

Gradual Adoption and Incremental Rollout

When introducing a service mesh, start with a small, non-critical portion of your application to test and validate its benefits. Gradually adopt the service mesh across other microservices, allowing your team to gain experience and understanding of the technology. This incremental rollout approach minimizes potential disruption and reduces the risk of unforeseen issues.

Monitor and Optimize Performance

Service mesh provides powerful observability features to monitor and analyze the performance of your application. Leverage these capabilities to identify bottlenecks, optimize resource usage, and ensure the overall health of your application. Continuously monitor performance metrics and logs to detect and troubleshoot issues early, preventing potential downtime or degraded user experiences.
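As a small illustration of what to watch, the sketch below computes request count, server-error rate, and median and 99th-percentile latency for one destination from per-request records, using the nearest-rank percentile method. In practice you would read these numbers from the mesh's telemetry (Istio, for example, exports request metrics for Prometheus) rather than compute them by hand; the `RequestRecord` fields and sample data here are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestRecord:
    """One request as reported by a sidecar (field names are hypothetical)."""

    source: str
    destination: str
    status: int
    latency_ms: float


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile for 0 < p <= 100."""
    ordered = sorted(values)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def summarize(records: list[RequestRecord], destination: str) -> dict[str, float]:
    hits = [r for r in records if r.destination == destination]
    if not hits:
        raise ValueError(f"no requests recorded for {destination}")
    latencies = [r.latency_ms for r in hits]
    server_errors = sum(1 for r in hits if r.status >= 500)
    return {
        "requests": len(hits),
        "error_rate": server_errors / len(hits),
        "p50_ms": percentile(latencies, 50),
        "p99_ms": percentile(latencies, 99),
    }


fast = (12.0, 15.0, 11.0, 14.0, 13.0, 16.0, 12.0, 18.0)
records = [RequestRecord("cart", "payment", 200, ms) for ms in fast]
records += [
    RequestRecord("cart", "payment", 503, 250.0),
    RequestRecord("cart", "payment", 200, 95.0),
]

summary = summarize(records, "payment")
print(summary)
# {'requests': 10, 'error_rate': 0.1, 'p50_ms': 14.0, 'p99_ms': 250.0}
```

Here the median looks healthy while the 99th percentile exposes the slow tail, which is why tail latency and error rate are usually worth alerting on rather than averages alone.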

Conclusion

In conclusion, a service mesh can significantly enhance the capabilities of Kubernetes-based applications by providing advanced traffic control, resiliency, fault tolerance, security, and observability features. However, it’s essential to understand the differences between a native Kubernetes system and one with a service mesh, as well as when to use this powerful technology.

Stay up-to-date with the latest developments in service mesh technology and continue to learn from the experiences of others in the community to maximize the benefits and minimize potential challenges. With the right approach, a service mesh can be an invaluable addition to your Kubernetes ecosystem.