Introduction

In this piece, we’re going to explore a number of programming terms, using the Go programming language (also known as Golang) to illustrate these concepts. I have experience with Golang, but recently, my focus has shifted to infrastructure management, so I’ve been away from hands-on programming for a while. This article serves as both a reminder and a summary of my prior knowledge.

Concurrent and Parallel

Concurrent

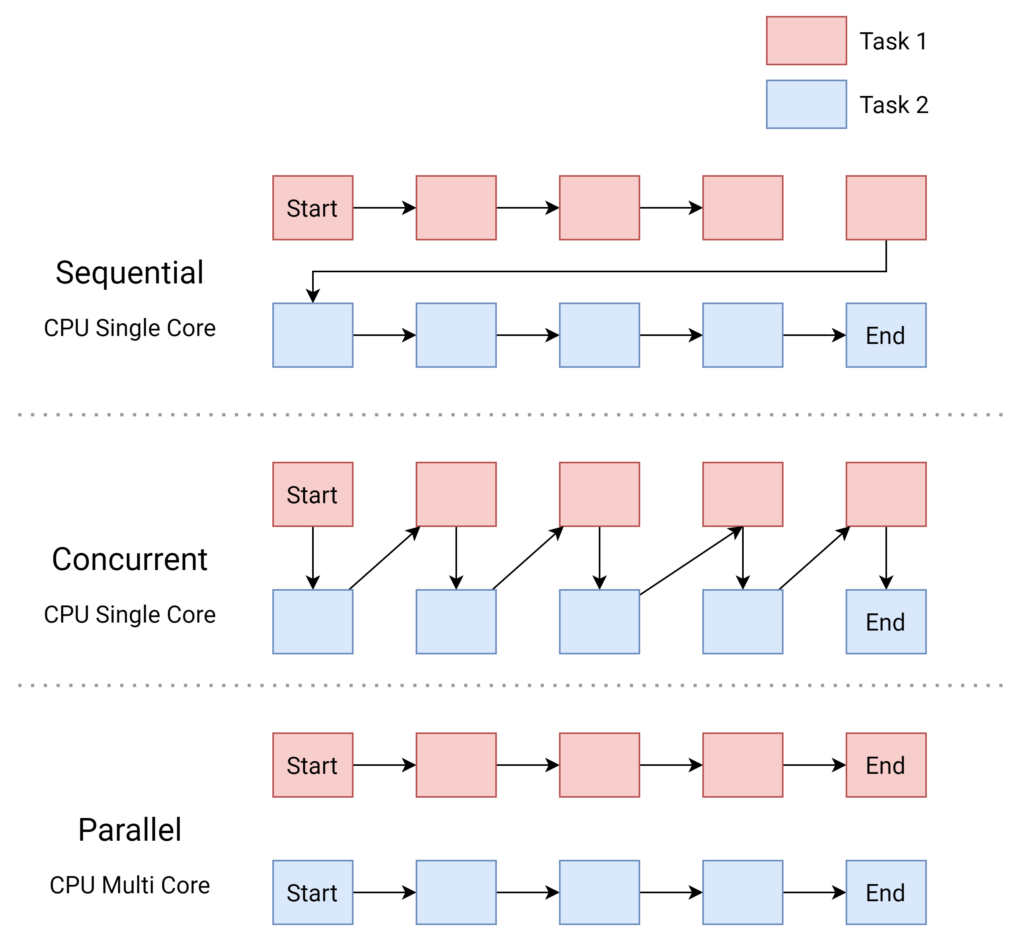

At its core, concurrency means structuring a program so that several tasks make progress during overlapping periods of time. The tasks appear to run simultaneously, but they are not necessarily executing at the same instant. In concurrent programming, the order in which the tasks execute is not predetermined. They may take turns on the CPU, switching between one another at intervals, which gives the illusion of parallelism.

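```go
package main

import (
	"fmt"
	"time"
)

// printNumbers prints 0 to 4, pausing briefly before each one.
func printNumbers() {
	for i := 0; i < 5; i++ {
		time.Sleep(1 * time.Millisecond)
		fmt.Printf("%d ", i)
	}
}

// printLetters prints A to E, pausing briefly before each one.
func printLetters() {
	for i := 'A'; i < 'A'+5; i++ {
		time.Sleep(1 * time.Millisecond)
		fmt.Printf("%c ", i)
	}
}

func main() {
	go printNumbers() // runs in its own goroutine
	go printLetters() // runs in its own goroutine

	// Keep main alive long enough for both goroutines to finish;
	// the program exits as soon as main returns.
	time.Sleep(100 * time.Millisecond)
}
```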

Output:

```
A 0 1 B C 2 3 D E 4
```

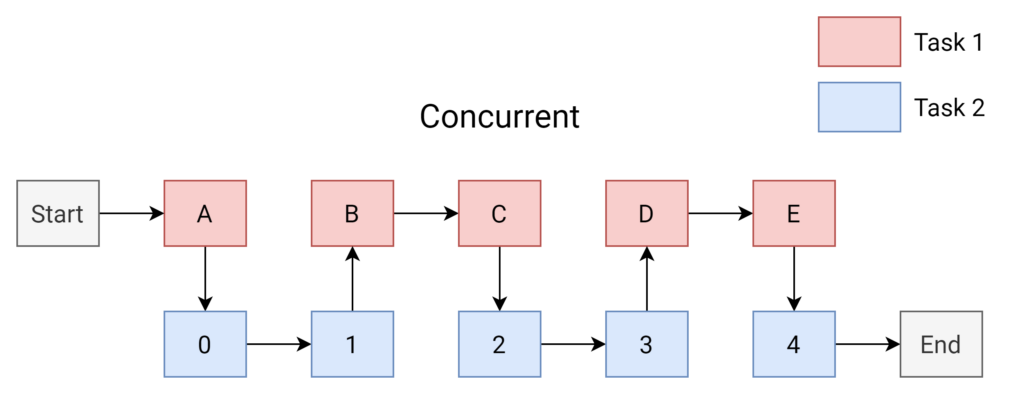

In the Go code above, we have two functions: printNumbers() and printLetters(). The main function starts each one in its own goroutine with the go keyword, so both can run concurrently. main then sleeps for 100 milliseconds. A Go program exits as soon as main returns, so without that pause the goroutines might never get to print anything.

The Go runtime manages these goroutines and schedules them so that they appear to run at the same time. As the diagram above illustrates, on a single CPU core they actually take turns using it. On a multi-core machine they may also run truly in parallel.

Because these functions are concurrent, the exact order of the output can vary each time you run the program. The only guarantee we have is that each function will print its numbers or letters in the order defined within the function itself.

This output is a good demonstration of concurrency. The printing of numbers and the printing of letters both make progress, but not necessarily in a strictly alternating or predictable pattern.
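Concurrency does not require multiple cores. The following minimal sketch limits the Go runtime to a single processor with runtime.GOMAXPROCS(1), so the goroutines can only take turns. The helper names produce and runConcurrently are hypothetical. The goroutines also send their values over a channel instead of printing them directly, so that the result can be collected and checked.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// produce sends each item on out. Because out is unbuffered, every send
// waits until the receiver takes the value, so a producer blocks regularly
// and the scheduler can switch to another goroutine.
func produce(items []string, out chan<- string, wg *sync.WaitGroup) {
	defer wg.Done()
	for _, it := range items {
		out <- it
	}
}

// runConcurrently starts two producers and returns the values in the order
// they were received.
func runConcurrently() []string {
	out := make(chan string) // unbuffered channel
	var wg sync.WaitGroup
	wg.Add(2)
	go produce([]string{"0", "1", "2", "3", "4"}, out, &wg)
	go produce([]string{"A", "B", "C", "D", "E"}, out, &wg)

	// Close the channel once both producers are done so the range loop ends.
	go func() {
		wg.Wait()
		close(out)
	}()

	var got []string
	for s := range out {
		got = append(got, s)
	}
	return got
}

func main() {
	// One processor: the goroutines are concurrent but cannot run in parallel.
	runtime.GOMAXPROCS(1)
	fmt.Println(runConcurrently())
}
```

The exact interleaving can differ between runs. What stays fixed is that the digits and the letters each appear in their own order.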

Parallel

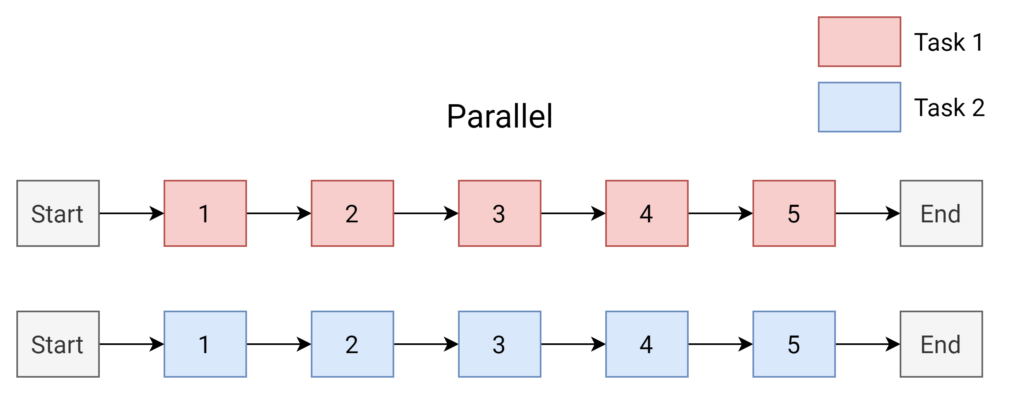

While concurrency is about dealing with multiple tasks at once, parallelism is about executing multiple tasks at exactly the same time. Parallel programming takes advantage of the multiple CPU cores in modern computers to perform more than one operation simultaneously.

Here’s an example of Go code whose tasks can execute in parallel on a multi-core machine:

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

func task(id int, wg *sync.WaitGroup) {
	defer wg.Done()
	for i := 1; i <= 5; i++ {
		fmt.Printf("Task %d: Step %d\n", id, i)
		time.Sleep(time.Millisecond * 500)
	}
}

func main() {
	var wg sync.WaitGroup
	wg.Add(2)       // Adding two tasks to WaitGroup
	go task(1, &wg) // Start task 1
	go task(2, &wg) // Start task 2
	wg.Wait()       // Wait for all tasks to finish
}
```

Output:

```
Task 2: Step 1
Task 1: Step 1
Task 1: Step 2
Task 2: Step 2
Task 2: Step 3
Task 1: Step 3
Task 1: Step 4
Task 2: Step 4
Task 2: Step 5
Task 1: Step 5
```

The above Go code introduces the concept of a WaitGroup from the sync package. A WaitGroup is a means of waiting for a collection of goroutines to finish executing. In our case, we’re waiting for two tasks (each being a separate goroutine) to finish executing before we allow our program to exit.

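We start the two tasks concurrently. Whether they also run in parallel depends on the hardware and the runtime. On a multi-core machine, the Go runtime can schedule the two goroutines on different cores at the same time. By default, GOMAXPROCS (the number of OS threads that can execute Go code simultaneously) equals the number of CPUs available to the program.

As a result, the steps from Task 1 and Task 2 interleave in the output. Keep in mind that interleaved output on its own does not prove parallel execution. Each task spends most of its time sleeping, so the same interleaving would also appear on a single core. The example is concurrent code that can run in parallel when multiple cores are available.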
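Because sleeping tasks interleave even on one core, CPU-bound work shows parallelism more directly. The following minimal sketch assumes the work can be split into independent chunks. The hypothetical parallelSum function sums a slice by giving each goroutine its own chunk. main also reports runtime.NumCPU() and the current runtime.GOMAXPROCS(0) setting.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// parallelSum splits nums into roughly equal chunks and sums each chunk in
// its own goroutine. Each goroutine writes only to its own slot in partial,
// so no mutex is needed.
func parallelSum(nums []int, workers int) int {
	if workers < 1 {
		workers = 1
	}
	partial := make([]int, workers)
	chunk := (len(nums) + workers - 1) / workers // ceiling division

	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		start := w * chunk
		if start >= len(nums) {
			break // fewer chunks than workers
		}
		end := start + chunk
		if end > len(nums) {
			end = len(nums)
		}
		wg.Add(1)
		go func(w int, part []int) {
			defer wg.Done()
			for _, n := range part {
				partial[w] += n
			}
		}(w, nums[start:end])
	}
	wg.Wait()

	total := 0
	for _, p := range partial {
		total += p
	}
	return total
}

func main() {
	// GOMAXPROCS(0) reports the current setting without changing it.
	fmt.Println("CPUs:", runtime.NumCPU(), "GOMAXPROCS:", runtime.GOMAXPROCS(0))

	nums := make([]int, 1_000_000)
	for i := range nums {
		nums[i] = i + 1
	}
	fmt.Println(parallelSum(nums, runtime.NumCPU())) // 500000500000
}
```

Whether the chunks actually run at the same moment still depends on GOMAXPROCS and the available cores. The result is the same either way.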

Synchronous and Asynchronous Blocking

| | Synchronous Blocking | Asynchronous Blocking |
|---|---|---|
| Execution Context | The operation is performed in the same execution context (often a single thread). | The operation is handed off to a different execution context (like another thread or process). |
| Blocking | The calling execution context (like a thread) is halted, or “blocks”, until the operation completes. | The original context is free to do other things in the meantime. In the case of “asynchronous blocking”, however, it explicitly chooses to wait until the operation is complete. |
| Use Case | Simpler to understand and debug. A good fit for tasks that must be performed in order, where each task depends on the output of the previous one. | Can achieve better efficiency and throughput for I/O-bound or network-bound tasks. Keeps user interfaces responsive and lets servers handle multiple clients. |
| Concurrency Management | Usually simpler, since there’s no need to manage separate threads or deal with synchronization issues. | Requires careful management of concurrent tasks and synchronization, but Go’s goroutines and channels provide high-level abstractions for this. |

Let’s demonstrate both with two simple Go programs:

- Synchronous Blocking

```go
package main

import (
	"fmt"
	"time"
)

func syncWork() {
	time.Sleep(2 * time.Second) // Simulate work that takes time
	fmt.Println("SyncWithBlocking Work Done")
}

func SyncWithBlocking() {
	fmt.Println("SyncWithBlocking Starting Work")
	syncWork() // This is a blocking call
	fmt.Println("SyncWithBlocking End of Program")
}

func main() {
	SyncWithBlocking()
}
```

Output:

```
SyncWithBlocking Starting Work
SyncWithBlocking Work Done
SyncWithBlocking End of Program
```

Like most programming languages, Go executes the statements within a single goroutine synchronously. It performs one operation at a time, in the order the statements are written. Our example follows this rule. syncWork() is a blocking call inside SyncWithBlocking(), so SyncWithBlocking() pauses until syncWork() returns, and only then prints its final line.

- Asynchronous Blocking

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

func asyncWork(wg *sync.WaitGroup) {
	defer wg.Done()
	time.Sleep(2 * time.Second) // Simulate work that takes time
	fmt.Println("AsyncWithBlocking Work Done")
}

func AsyncWithBlocking() {
	fmt.Println("AsyncWithBlocking Starting Work")
	var wg sync.WaitGroup
	wg.Add(1)
	go asyncWork(&wg) // This operation is now asynchronous
	wg.Wait()         // But we still block here until asyncWork is done
	fmt.Println("AsyncWithBlocking End of Program")
}

func main() {
	AsyncWithBlocking()
}
```

Output:

```
AsyncWithBlocking Starting Work
AsyncWithBlocking Work Done
AsyncWithBlocking End of Program
```

In this scenario, asyncWork() runs asynchronously because it is started in its own goroutine, which executes independently of AsyncWithBlocking(). However, the wg.Wait() call still blocks. AsyncWithBlocking(), and therefore main(), cannot proceed until asyncWork() has called wg.Done(). The work is handed off to another goroutine, but the caller waits for it anyway. That combination is what makes this an asynchronous blocking operation.

Preemptive and Non-preemptive

At the heart of multitasking and concurrent programming lies the distinction between preemptive and non-preemptive scheduling. The two differ in how control passes from one task to another. Preemptive multitasking lets an operating system or runtime interrupt a task midway, suspend it, and hand the CPU to another task. This prevents any single task from monopolizing the CPU and keeps the system responsive. However, a task can be interrupted in the middle of updating shared data. Such data must therefore be protected with careful synchronization to avoid corruption and inconsistency.
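A preempted (or parallel) goroutine can be interrupted between reading and writing shared data, so an unprotected counter++ can lose updates. The following minimal sketch shows the usual fix in Go: guarding the counter with a sync.Mutex. The names SafeCounter and countConcurrently are hypothetical.

```go
package main

import (
	"fmt"
	"sync"
)

// SafeCounter is a hypothetical counter shared by many goroutines.
type SafeCounter struct {
	mu sync.Mutex
	n  int
}

// Inc performs the read-modify-write under the lock, so an interruption
// between the read and the write cannot lose an update.
func (c *SafeCounter) Inc() {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.n++
}

// Value reads the counter under the same lock.
func (c *SafeCounter) Value() int {
	c.mu.Lock()
	defer c.mu.Unlock()
	return c.n
}

// countConcurrently starts workers goroutines that each increment the
// shared counter perWorker times.
func countConcurrently(workers, perWorker int) int {
	var c SafeCounter
	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for i := 0; i < perWorker; i++ {
				c.Inc()
			}
		}()
	}
	wg.Wait()
	return c.Value()
}

func main() {
	fmt.Println(countConcurrently(8, 1000)) // always 8000 with the mutex in place
}
```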

Non-preemptive, or cooperative, multitasking instead requires each task to give up control of the CPU voluntarily before another task can run. This model is simpler and less prone to data corruption, because a task can only be switched out at the points where it yields. However, a task that rarely yields can starve the others and become a bottleneck.
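Go’s scheduler is not purely cooperative, but runtime.Gosched lets a goroutine yield the processor voluntarily. This makes it a convenient way to illustrate the cooperative style. In the following sketch, the hypothetical takeTurns function limits the runtime to one processor. Two goroutines each record a step and then yield.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// takeTurns runs two goroutines on a single processor. Each one records a
// step and then calls runtime.Gosched to hand the processor to the other,
// mimicking cooperative multitasking.
func takeTurns(steps int) []string {
	prev := runtime.GOMAXPROCS(1)
	defer runtime.GOMAXPROCS(prev) // restore the original setting

	var (
		mu  sync.Mutex
		log []string
		wg  sync.WaitGroup
	)
	worker := func(name string) {
		defer wg.Done()
		for i := 0; i < steps; i++ {
			mu.Lock()
			log = append(log, name)
			mu.Unlock()
			runtime.Gosched() // voluntarily yield so the other goroutine can run
		}
	}

	wg.Add(2)
	go worker("A")
	go worker("B")
	wg.Wait()
	return log
}

func main() {
	// The names typically alternate, but the exact order is not guaranteed.
	fmt.Println(takeTurns(3))
}
```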

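Go follows an M:N scheduling model. The Go runtime’s scheduler, which runs in user space, multiplexes many goroutines (M) onto a smaller number of OS threads (N). The scheduler is preemptive, but it does not work exactly like OS-level preemption. Before Go 1.14, a goroutine could be preempted only at certain safe points, such as function calls, so a tight loop without calls could monopolize its thread. Since Go 1.14, the runtime can also preempt such goroutines asynchronously on most platforms.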
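One way to see the M:N model at work is to start far more goroutines than there are processors. In the following sketch, the hypothetical runMany function caps GOMAXPROCS, the number of OS threads that may execute Go code at the same time. It then starts n goroutines, each incrementing an atomic counter.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
	"sync/atomic"
)

// runMany starts n goroutines while the runtime is limited to procs
// processors, and returns how many of them completed.
func runMany(n, procs int) int64 {
	prev := runtime.GOMAXPROCS(procs)
	defer runtime.GOMAXPROCS(prev) // restore the original setting

	var done int64
	var wg sync.WaitGroup
	wg.Add(n)
	for i := 0; i < n; i++ {
		go func() {
			defer wg.Done()
			atomic.AddInt64(&done, 1)
		}()
	}
	wg.Wait()
	return atomic.LoadInt64(&done)
}

func main() {
	// 10,000 goroutines multiplexed onto at most 2 processors.
	fmt.Println(runMany(10_000, 2)) // 10000
}
```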

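```go
package main

import (
	"fmt"
	"time"
)

func main() {
	go func() {
		// A long, CPU-bound loop with no function calls. The constant 1e10
		// only fits in a 64-bit int, and sum overflows, which is why the
		// printed value is negative.
		sum := 0
		for i := 0; i < 1e10; i++ {
			sum += i
		}
		fmt.Println(sum)
	}()

	// This goroutine still gets a chance to run while the loop above is busy.
	// On a multi-core machine it can run on another OS thread, and since
	// Go 1.14 the runtime can also preempt the long-running loop.
	go func() {
		time.Sleep(time.Second)
		fmt.Println("Hello, World!")
	}()

	// main exits after 5s; the sum is printed only if the loop finishes first.
	time.Sleep(5 * time.Second)
}
```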

Output:

```
Hello, World!
-5340232226128654848
```

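In this example, the first goroutine could hog a CPU, yet the second goroutine still gets to run. On a multi-core machine, however, that is mostly because the two goroutines can run in parallel on different OS threads. The example only really exercises Go’s preemptive scheduler when the runtime is limited to a single processor, for example with runtime.GOMAXPROCS(1). In that case, Go 1.14 and later preempt the busy loop asynchronously, so other goroutines can still be scheduled.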