Introduction

Kubernetes (often abbreviated k8s) has become a key tool for managing containerized applications. This container orchestration system helps developers and operations teams deploy, scale, and maintain applications by automating much of that work. We’ll look at the history that led to Kubernetes, examine the factors behind its widespread adoption, and identify the situations where it is the right fit.

The aim is to give you a solid understanding of why Kubernetes matters and why it has become the most widely adopted platform for managing containerized applications.

Historical Background of the Emergence of Kubernetes

To understand Kubernetes, it helps to look first at how application deployment has evolved. Tracing the history of deployment strategies shows which problems Kubernetes was designed to solve and what it adds over earlier approaches.

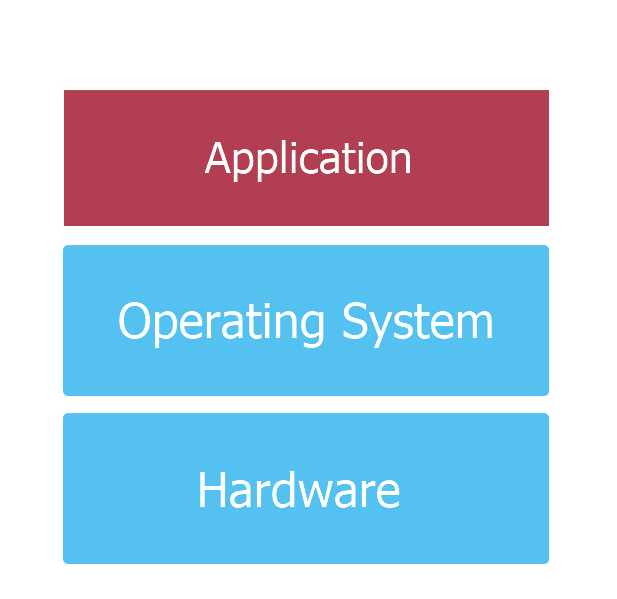

Traditional Deployment

Traditional deployment refers to the method of installing applications directly onto physical servers. In this approach, each application runs on a dedicated server, and resources like CPU, memory, and storage are allocated based on the needs of the application.

Problems of Traditional Systems

The traditional deployment model has several limitations, which have become more evident as software development and infrastructure demands have grown:

- Resource inefficiency: Dedicated servers often lead to underutilization of resources, as the applications might not consume all the allocated resources, resulting in wasted capacity.

- Scalability challenges: Scaling applications in this model can be time-consuming and expensive, as it typically involves procuring and setting up additional hardware.

- Difficulty in maintenance and updates: Managing and updating applications becomes increasingly complex, as each server must be individually maintained, patched, and monitored.

- Inflexible resource allocation: The inability to dynamically allocate or share resources between applications can lead to performance issues and bottlenecks.

These limitations led to the development of more efficient and flexible deployment strategies, paving the way for virtualized and containerized deployment models.

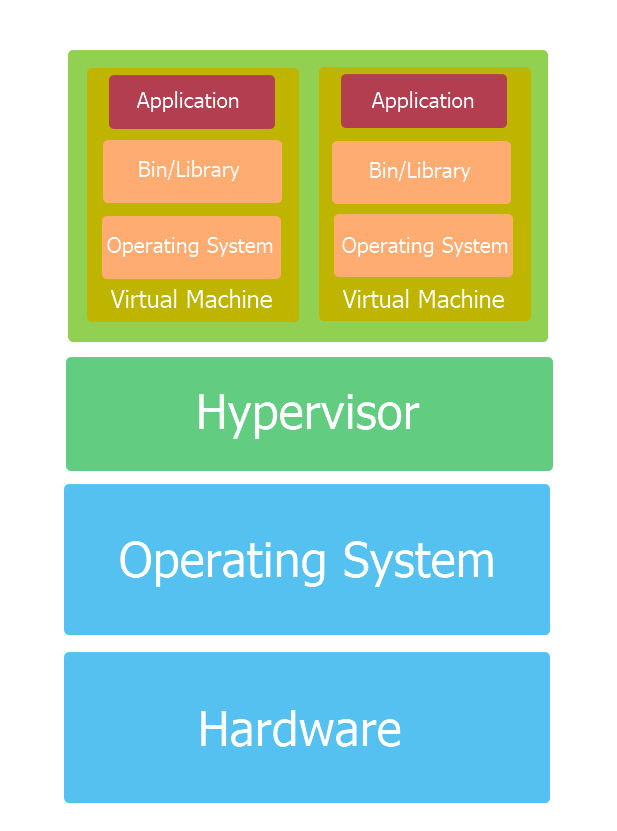

Virtualized Deployment

Virtualized deployment is an approach where multiple virtual machines (VMs) run on a single physical server using a technology called a hypervisor. The hypervisor is responsible for creating, managing, and allocating resources to each VM, which has its own operating system and runs applications independently from other VMs. This allows multiple applications to share the same physical hardware while still being isolated from each other.

What Problems Does Virtualization Solve?

Virtualized deployment addresses some of the issues associated with traditional deployment:

- Enhanced resource utilization: By running multiple VMs on a single physical server, resources such as CPU, memory, and storage can be more efficiently utilized, reducing hardware costs and waste.

- Improved isolation: Each VM runs in its own isolated environment, preventing applications from interfering with one another and providing a higher level of security.

- Easier scalability: Virtual machines can be added, removed, or resized to accommodate changing workloads, often without procuring additional physical hardware as long as the host has spare capacity.

- Simplified maintenance and updates: Virtualized environments make it easier to manage and update applications, as changes can be made within individual VMs without affecting other applications running on the same server.

Problems of Virtualized Systems

Despite the benefits of virtualized deployment, there are still some challenges:

- Performance overhead: Virtual machines introduce a layer of overhead due to the need for each VM to run its own operating system, which can lead to reduced performance compared to running applications directly on physical hardware.

- Less efficient resource sharing: While virtualization improves resource utilization compared to traditional deployment, it still doesn’t allow for the same level of resource sharing as containerization, resulting in potential waste and inefficiencies.

- Slower startup times: VMs require booting up an entire operating system, which can result in longer startup times compared to containers.

These limitations paved the way for the development of container deployment, which sought to address the issues of both traditional and virtualized systems.

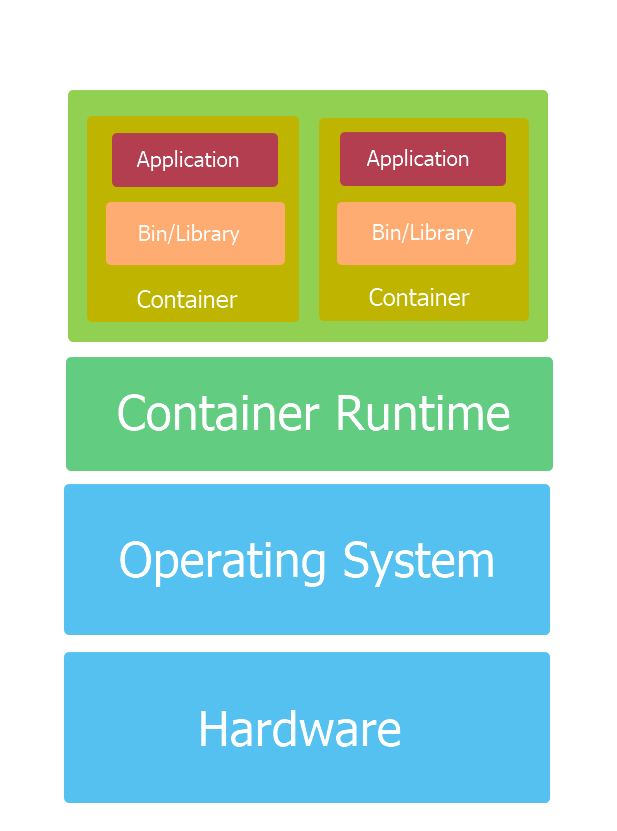

Container Deployment

Container deployment is a modern approach to application deployment in which applications are packaged with their dependencies into isolated units called containers. Containers share the host operating system’s kernel, so multiple containers can run on the same host while remaining isolated from one another. This deployment model offers greater flexibility, resource efficiency, and portability than traditional and virtualized deployment strategies.

What Problems Do Containers Solve?

Container deployment addresses several issues that were prevalent in virtualized systems:

- Improved resource utilization: Containers share the host operating system’s kernel, reducing overhead and enabling more efficient use of system resources than virtual machines, each of which runs its own guest OS.

- Faster startup times: Since containers don’t need to boot up an entire OS like virtual machines, they start much faster, improving deployment and scaling speeds.

- Portability: Containers package applications and their dependencies together, ensuring consistent behavior across different environments, and making it easier to move applications between development, testing, and production environments.

- Simplified application management: Containers make a microservices architecture practical, allowing applications to be broken down into smaller, modular components that can be developed, deployed, and scaled independently.

Although container deployment addressed many challenges of virtualized systems, it also introduced new complexities in managing and orchestrating containers, especially when dealing with large-scale deployments. This led to the emergence of Kubernetes as a powerful container orchestration platform to manage these complexities.

What is Container Orchestration and Kubernetes?

As container deployment gained popularity, the need for a system to efficiently manage and coordinate containers became crucial. This is where container orchestration comes into play.

Container Orchestration

Container orchestration is the automated process of deploying, scaling, networking, and managing containerized applications. It addresses the complexities of managing large-scale container deployments by automating tasks such as resource allocation, load balancing, scaling, and recovery. Container orchestration tools help developers and operations teams maintain control over container environments, ensuring optimal performance and reliability.
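
In Kubernetes, this automation is built around control loops: you declare a desired state (for example, three running replicas of a service), and controllers such as the ReplicaSet controller repeatedly compare it with the observed state and act to close the gap. The Python sketch below simulates a single pass of such a loop in memory. The `Replica` class and `reconcile` function are hypothetical names for illustration, not part of any Kubernetes API, and the sketch ignores scheduling, networking, and timing.

```python
import uuid
from dataclasses import dataclass


@dataclass
class Replica:
    """A stand-in for a running container instance (hypothetical model)."""
    name: str
    healthy: bool = True


def reconcile(desired: int, replicas: list[Replica]) -> list[Replica]:
    """One pass of a simplified control loop (illustrative, not a Kubernetes API).

    Drops failed replicas, starts new ones until `desired` healthy replicas
    exist, and stops any surplus.
    """
    # Recovery: discard replicas that have failed
    running = [r for r in replicas if r.healthy]
    # Scale up: start fresh replicas, each with a random name suffix
    while len(running) < desired:
        running.append(Replica(name=f"app-{uuid.uuid4().hex[:6]}"))
    # Scale down: keep only as many replicas as desired
    return running[:desired]


# Example: one replica has crashed and the desired count is 3
pods = reconcile(desired=3, replicas=[Replica("web-a"), Replica("web-b", healthy=False)])
print(len(pods), all(p.healthy for p in pods))  # 3 True
```

Real controllers repeat this comparison continuously rather than once, which is what lets an orchestrator replace a crashed container without manual intervention.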

Challenges addressed by orchestration

Container orchestration solves several challenges that arise when dealing with containers:

- Managing large-scale deployments: Orchestrators simplify the process of deploying and managing numerous containers across multiple hosts.

- Handling failures and recovery: Orchestrators can automatically detect and recover from container failures, ensuring high availability and resilience.

- Load balancing and networking: Orchestrators handle networking between containers and distribute load evenly among them, optimizing performance and resource usage.

- Scaling and resource allocation: Orchestrators can dynamically scale containerized applications based on demand, ensuring efficient resource utilization and optimal performance.
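
To make deploying containers across multiple hosts more concrete, the sketch below places a container onto one of several nodes. It mirrors the two-phase shape of the Kubernetes scheduler, which first filters out nodes that cannot satisfy a pod’s resource requests and then scores the remaining candidates. The scoring rule used here (prefer the node with the most free CPU), the `Node` class, and the `place` function are simplifying assumptions, not the scheduler’s actual plugins.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """A host with some remaining capacity (hypothetical model)."""
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


def place(cpu_millicores: int, memory_mib: int, nodes: list[Node]) -> Optional[str]:
    """Pick a node for a container request, or return None if nothing fits."""
    # Filter: keep only nodes with enough free CPU and memory for the request
    feasible = [
        n for n in nodes
        if n.free_cpu_millicores >= cpu_millicores and n.free_memory_mib >= memory_mib
    ]
    if not feasible:
        return None  # no node fits; a real scheduler would leave the pod pending
    # Score: prefer the node with the most free CPU (illustrative rule only)
    best = max(feasible, key=lambda n: n.free_cpu_millicores)
    # Reserve the requested resources on the chosen node
    best.free_cpu_millicores -= cpu_millicores
    best.free_memory_mib -= memory_mib
    return best.name


nodes = [Node("node-1", 500, 1024), Node("node-2", 2000, 512), Node("node-3", 1500, 4096)]
print(place(1000, 1024, nodes))  # node-3
```

Note that `node-2` has the most free CPU but is filtered out because it lacks the requested memory, which is why filtering happens before scoring.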

Kubernetes

Kubernetes, often abbreviated as k8s, is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. Developed by Google and later donated to the Cloud Native Computing Foundation (CNCF), Kubernetes has become the de facto standard for container orchestration, providing a robust and extensible framework for managing containers at scale.

Comparison with other orchestration tools

While there are several container orchestration tools available, such as Docker Swarm and Apache Mesos, Kubernetes stands out due to its extensive feature set, strong community support, and wide adoption across the industry. Kubernetes offers advanced features like auto-scaling, rolling updates, self-healing, and custom resource definitions, which make it a powerful choice for managing complex containerized applications.
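
Auto-scaling is a good example of how these features work. The Kubernetes documentation describes the Horizontal Pod Autoscaler’s core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), and it skips scaling when that ratio is within a tolerance of 1.0 (0.1 by default). The Python function below reproduces only that arithmetic. It ignores the stabilization windows, min/max replica bounds, and missing-metric handling the real controller applies, and the name `desired_replicas` is hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Simplified HPA replica calculation (hypothetical helper, not a Kubernetes API)."""
    ratio = current_metric / target_metric
    # Close enough to the target: keep the current count to avoid flapping
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    # Otherwise scale proportionally, rounding up
    return math.ceil(current_replicas * ratio)


# 4 replicas averaging 90% CPU against a 50% target
print(desired_replicas(4, 90, 50))  # 8
```

Rounding up means the autoscaler errs on the side of extra capacity rather than leaving the workload under-provisioned.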

Why Kubernetes is preferred

Kubernetes has gained widespread popularity and is preferred over other orchestration tools for several reasons:

- Scalability: Kubernetes is designed to handle large-scale container deployments, making it an ideal choice for organizations with growing infrastructure needs.

- Extensibility: Kubernetes is highly modular and extensible, enabling users to customize and extend its functionality using plugins and add-ons.

- Active community: Kubernetes has a vibrant and active community of contributors and users, ensuring continuous improvement and support for the platform.

- Broad industry adoption: Many major cloud providers offer managed Kubernetes services, making it easier for organizations to adopt and integrate Kubernetes into their existing infrastructure.

When to use Kubernetes?

Consider using Kubernetes in situations where you have large-scale deployments, dynamic scaling requirements, complex networking and service discovery needs, or when working in hybrid or multi-cloud environments.

Scenarios or Use Cases for Kubernetes

- Microservices Architecture: In a microservices architecture, applications are divided into small, independent services that can be developed, deployed, and scaled independently. Kubernetes simplifies the management of these services, automating the deployment, scaling, and networking of containers, making it an excellent choice for managing microservices-based applications.

- CI/CD Pipelines: Kubernetes integrates well with Continuous Integration and Continuous Deployment (CI/CD) pipelines, streamlining the process of building, testing, and deploying applications. Its built-in rolling updates, together with support for patterns such as canary deployments, help minimize downtime and make transitions between application versions smoother.

- Machine Learning and AI Workloads: Kubernetes can efficiently manage machine learning and artificial intelligence workloads, as it supports scheduling onto GPU resources (through device plugins) and allows dynamic scaling based on resource demand. This makes it a strong choice for deploying and managing data processing, training, and inference tasks in the ML/AI domain.

- IoT and Edge Computing: In IoT and edge computing scenarios, applications need to run on diverse, distributed environments, often with constrained resources. Lightweight Kubernetes distributions such as K3s, along with support for a variety of container runtimes, make Kubernetes well-suited for managing containerized applications in these contexts.

- Multi-tenant Applications: For organizations that provide multi-tenant applications or Software-as-a-Service (SaaS) platforms, Kubernetes offers features like namespaces and resource quotas to isolate and manage resources for individual tenants, ensuring fair allocation and preventing resource contention.
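
The resource quotas mentioned for multi-tenant applications boil down to an admission check: a new workload in a tenant’s namespace is rejected if its requests would push that namespace’s total usage past the quota’s hard limits. The Python sketch below models this with plain dictionaries. The resource keys mirror Kubernetes quota names such as `requests.cpu` and `pods`, but the `NamespaceQuota` class, its integer units (CPU in millicores), and the `admit` method are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class NamespaceQuota:
    """Per-tenant quota tracking (hypothetical model, not a Kubernetes API)."""
    hard: dict[str, int]                                 # e.g. {"requests.cpu": 2000, "pods": 2}
    used: dict[str, int] = field(default_factory=dict)  # running totals for this namespace

    def admit(self, requested: dict[str, int]) -> bool:
        # Reject if any quota-tracked resource would exceed its hard limit
        for resource, limit in self.hard.items():
            if self.used.get(resource, 0) + requested.get(resource, 0) > limit:
                return False
        # Otherwise record the new usage against this namespace only
        for resource, amount in requested.items():
            self.used[resource] = self.used.get(resource, 0) + amount
        return True


quotas = {
    "tenant-a": NamespaceQuota(hard={"requests.cpu": 2000, "pods": 2}),
    "tenant-b": NamespaceQuota(hard={"requests.cpu": 1000, "pods": 5}),
}
pod = {"requests.cpu": 800, "pods": 1}
print([quotas["tenant-a"].admit(pod) for _ in range(3)])  # [True, True, False]
print(quotas["tenant-b"].admit(pod))                       # True
```

Because usage is tracked per namespace, tenant A exhausting its quota has no effect on what tenant B can still run.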

Factors to Consider When Deciding to Use Kubernetes

Before choosing to implement Kubernetes for your application, consider the following factors:

- Complexity: Kubernetes comes with a learning curve and added complexity compared to simpler container management solutions. Assess whether your application’s requirements justify the added complexity and if your team has the necessary skills or willingness to learn and maintain a Kubernetes-based infrastructure.

- Existing Infrastructure: Consider your current infrastructure and how well Kubernetes can integrate with it. Kubernetes works well with cloud-native applications but may require additional effort to integrate with legacy systems or non-containerized workloads.

- Cost: While Kubernetes itself is open-source, deploying and managing a Kubernetes cluster can incur additional costs, such as cloud provider charges, hardware expenses, and maintenance overhead. Evaluate whether the benefits of using Kubernetes outweigh these costs for your specific use case.

- Team Expertise: Kubernetes requires a certain level of expertise to manage effectively. Assess your team’s familiarity with containerization, Kubernetes concepts, and related technologies to determine if you have the necessary knowledge to successfully implement and maintain a Kubernetes-based infrastructure.

- Support and Community: Kubernetes has a strong community and ecosystem, which can be an advantage in terms of finding support, resources, and third-party integrations. However, it’s essential to consider the specific requirements of your application and whether the available support and integrations align with your needs.

By carefully evaluating these factors, you can make an informed decision about whether Kubernetes is the right choice for managing your containerized applications.

Conclusion

Kubernetes has emerged as a leading container orchestration platform due to its robust feature set, extensibility, and widespread adoption. By addressing the complexities of managing containerized applications, Kubernetes has become an indispensable tool for organizations embracing microservices, cloud-native development, and modern application architectures.

When deciding whether to use Kubernetes, it’s essential to consider factors such as the scale of your deployment, the complexity of your application, your team’s expertise, and the potential costs involved. Kubernetes is a powerful solution for managing large-scale, complex applications with dynamic scaling requirements, advanced networking needs, and strict reliability and resilience demands. However, for smaller-scale or simpler applications, alternative container management solutions may be more suitable and easier to manage.

By carefully evaluating your application’s requirements and the benefits Kubernetes offers, you can determine if Kubernetes is the right choice for your container orchestration needs, and harness its potential to build, deploy, and scale applications efficiently and effectively.