Introduction

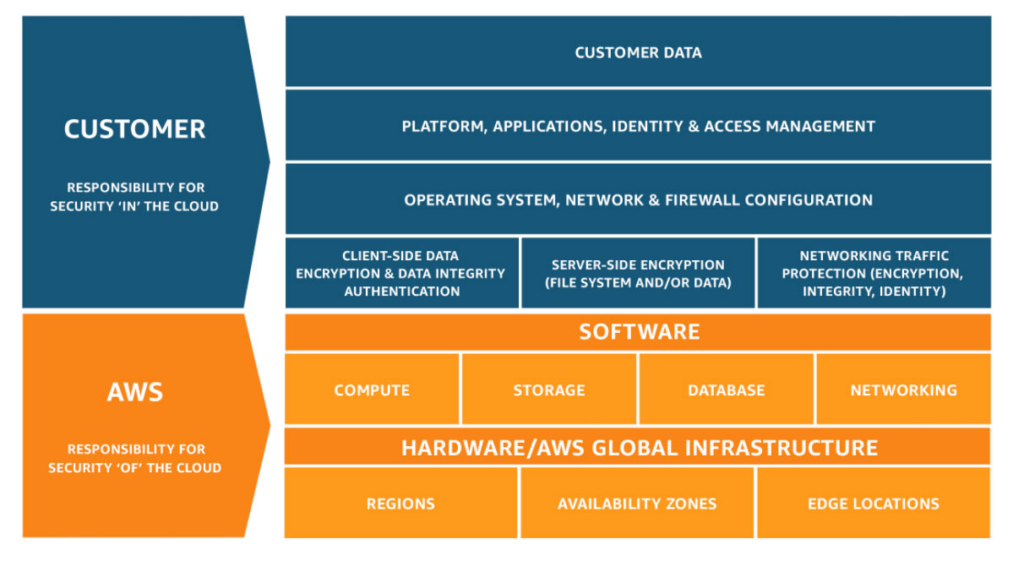

In today’s digital landscape, securing your cloud infrastructure is paramount. When working with Amazon Web Services (AWS), following best practices is essential to protect your data and resources. Under the AWS shared responsibility model, AWS is responsible for security of the cloud (the hardware, software, networking, and facilities that run AWS services), while customers are responsible for security in the cloud: their data, identity and access management, and the configuration of the services they use. This blog post walks you through ten best practices that will help you strengthen the security of your AWS infrastructure.

10 AWS Best Practices for Security

Implement the principle of least privilege

One fundamental security practice to adopt is the principle of least privilege. This concept revolves around granting users and applications the minimum level of access they need to perform their tasks. By implementing this principle, you can reduce the risk of unauthorized access and limit the potential damage from compromised credentials.

To achieve this in AWS, you should take advantage of Identity and Access Management (IAM) policies, roles, and groups. Create specific policies that grant the necessary permissions for each user or application, and attach them to corresponding IAM roles or groups. Avoid using overly broad policies, and regularly review your IAM configurations to ensure they remain up-to-date with your evolving infrastructure.

An excellent tool for generating least-privilege policies is Policy Sentry (policy_sentry).

This CLI-based IAM least-privilege policy generator lets you create fine-grained IAM policies in seconds, without combing through the AWS IAM documentation manually. Policy Sentry streamlines the process of writing IAM policies and scopes them down by access level (such as read, write, or list) and by resource. With it, you can maintain high security standards and still meet project deadlines, without compromising on the principle of least privilege.
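To make "least privilege" concrete, here is a small stdlib-only sketch of what a scoped-down policy looks like, plus a check that flags Allow statements using wildcard actions or resources. It is not Policy Sentry's output format or API; the bucket and prefix names, and the helper names s3_prefix_read_policy and broad_grants, are hypothetical.

```python
def s3_prefix_read_policy(bucket: str, prefix: str) -> dict:
    """Build a policy that can only list and read objects under one prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # ListBucket is authorized against the bucket ARN; limit it to the prefix.
                "Sid": "ListOnlyThePrefix",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}/*"]}},
            },
            {
                # GetObject is authorized against object ARNs under the prefix only.
                "Sid": "ReadOnlyThePrefix",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            },
        ],
    }


def _as_list(value) -> list:
    """IAM accepts a single string/object or a list; normalize to a list."""
    return value if isinstance(value, list) else [value]


def broad_grants(policy: dict) -> list[str]:
    """Return findings for Allow statements that use wildcard actions or resources."""
    findings = []
    for stmt in _as_list(policy.get("Statement", [])):
        if stmt.get("Effect") != "Allow":
            continue
        for action in _as_list(stmt.get("Action", [])):
            if action == "*" or action.endswith(":*"):
                findings.append(f"broad action: {action}")
        for resource in _as_list(stmt.get("Resource", [])):
            if resource == "*":
                findings.append("broad resource: *")
    return findings
```

Calling broad_grants(s3_prefix_read_policy("example-bucket", "reports")) returns an empty list, while a statement such as {"Effect": "Allow", "Action": "s3:*", "Resource": "*"} produces two findings. The wildcard check is intentionally crude; real policies can also be too broad through NotAction or overly generous ARN patterns.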

Regularly review and rotate IAM access keys and passwords

Managing and rotating your IAM credentials is an essential aspect of maintaining a secure AWS environment. Regularly reviewing and rotating access keys and passwords can help prevent unauthorized access and reduce the risk of compromised credentials. Best practices for managing IAM access keys and passwords include:

- Set up a regular schedule to review and rotate IAM access keys and passwords. This ensures that credentials are up-to-date and minimizes the risk of unauthorized access due to compromised keys or passwords.

- Configure an IAM account password policy to enforce complexity requirements, such as a minimum length, the use of symbols, and a mix of uppercase and lowercase letters. This helps prevent weak passwords that are more susceptible to brute-force attacks.

- Use AWS Secrets Manager to securely store and manage access keys, passwords, and other secrets. Secrets Manager can rotate secrets such as database credentials automatically on a schedule, using rotation functions, with little or no manual intervention.

- Use the IAM credential report and access key "last used" information, together with AWS CloudTrail logs, to find outdated or unused IAM access keys and passwords. Promptly deactivate or delete unused or compromised credentials to reduce the risk of unauthorized access.

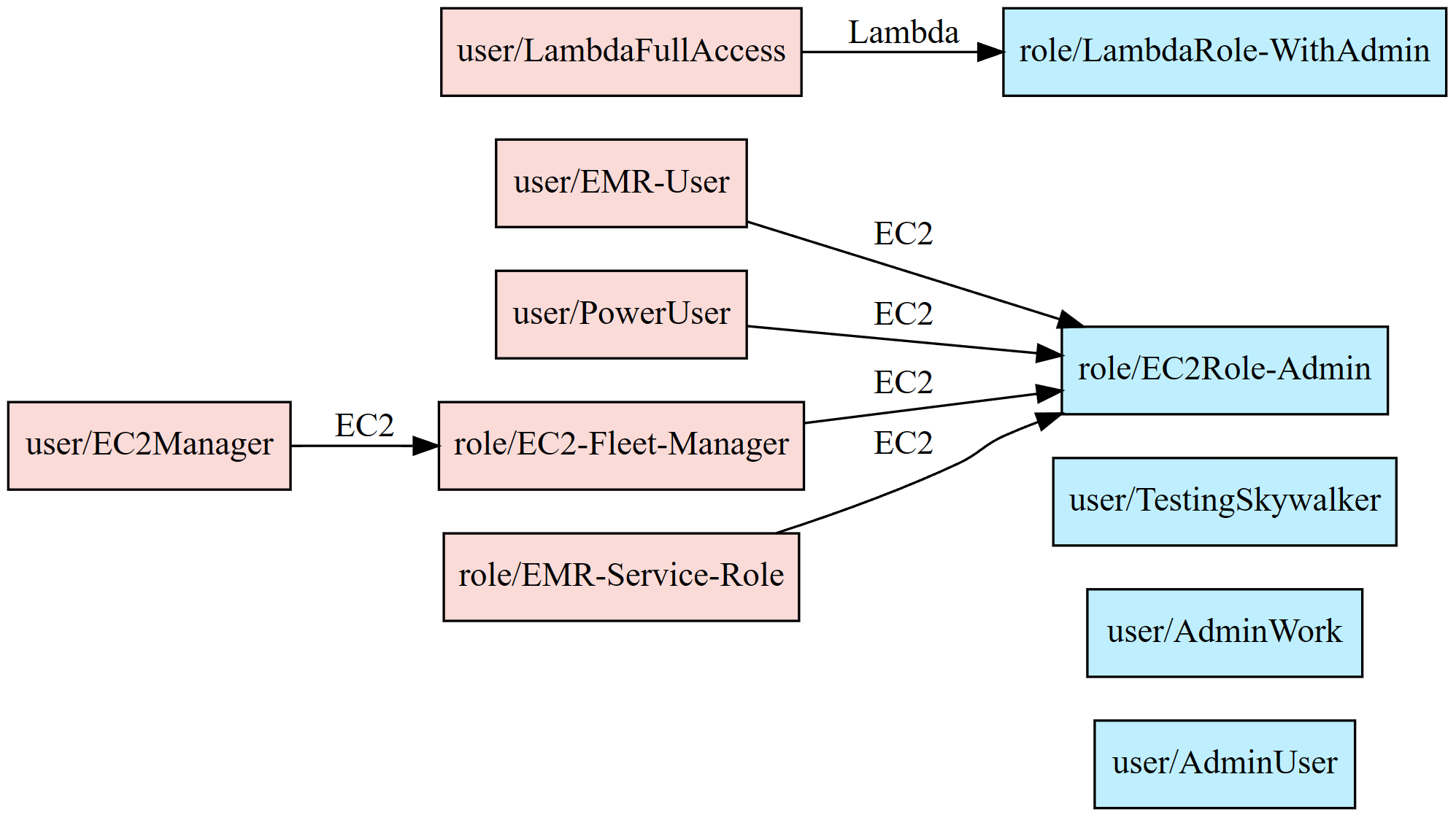

- You can also use Principal Mapper (PMapper), an open-source script and library for identifying risks in the IAM configuration of an AWS account or organization. PMapper models IAM users and roles as a directed graph, which lets it check for privilege escalation and for alternate paths an attacker could take to gain access to a resource or action in AWS.
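A review schedule is easier to keep when it is automated. The IAM credential report (generated with the GenerateCredentialReport API and downloaded with GetCredentialReport, or from the IAM console) is a CSV with per-user columns such as access_key_1_active and access_key_1_last_rotated. The sketch below flags active keys older than an assumed 90-day rotation window. It assumes timestamps are ISO 8601 strings with a UTC offset and that missing values appear as N/A; verify both against a real report before relying on it. The function name is hypothetical.

```python
import csv
import io
from datetime import datetime, timedelta, timezone
from typing import Optional

# Assumed rotation window; adjust to your own policy.
MAX_KEY_AGE = timedelta(days=90)


def stale_access_keys(report_csv: str, now: Optional[datetime] = None) -> list[tuple[str, str]]:
    """Return (user, key slot) pairs for active keys older than MAX_KEY_AGE."""
    now = now or datetime.now(timezone.utc)
    stale = []
    for row in csv.DictReader(io.StringIO(report_csv)):
        for slot in ("access_key_1", "access_key_2"):
            if row.get(f"{slot}_active") != "true":
                continue  # inactive or absent keys cannot sign requests
            rotated = row.get(f"{slot}_last_rotated", "N/A")
            if rotated in ("N/A", ""):
                continue
            # fromisoformat parses the "+00:00" offset into an aware datetime.
            if now - datetime.fromisoformat(rotated) > MAX_KEY_AGE:
                stale.append((row["user"], slot))
    return stale
```

The real report has many more columns (password_last_used, mfa_active, and so on); csv.DictReader simply ignores the ones this check does not read.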

By regularly reviewing and rotating IAM access keys and passwords, you can help protect your AWS environment from unauthorized access and potential security incidents.

Enable AWS multi-factor authentication (MFA)

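Another critical step in securing your AWS infrastructure is enabling multi-factor authentication (MFA) for your AWS root account and IAM users. MFA adds an extra layer of protection by requiring users to present a second authentication factor, such as a code from a virtual or hardware MFA device, in addition to their password. This means that even if a user’s password is compromised, an attacker would still need to pass the MFA challenge to sign in. Note that MFA is not automatically required for programmatic requests signed with access keys; to enforce it there, your IAM policies must require it (for example, with the aws:MultiFactorAuthPresent condition key).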

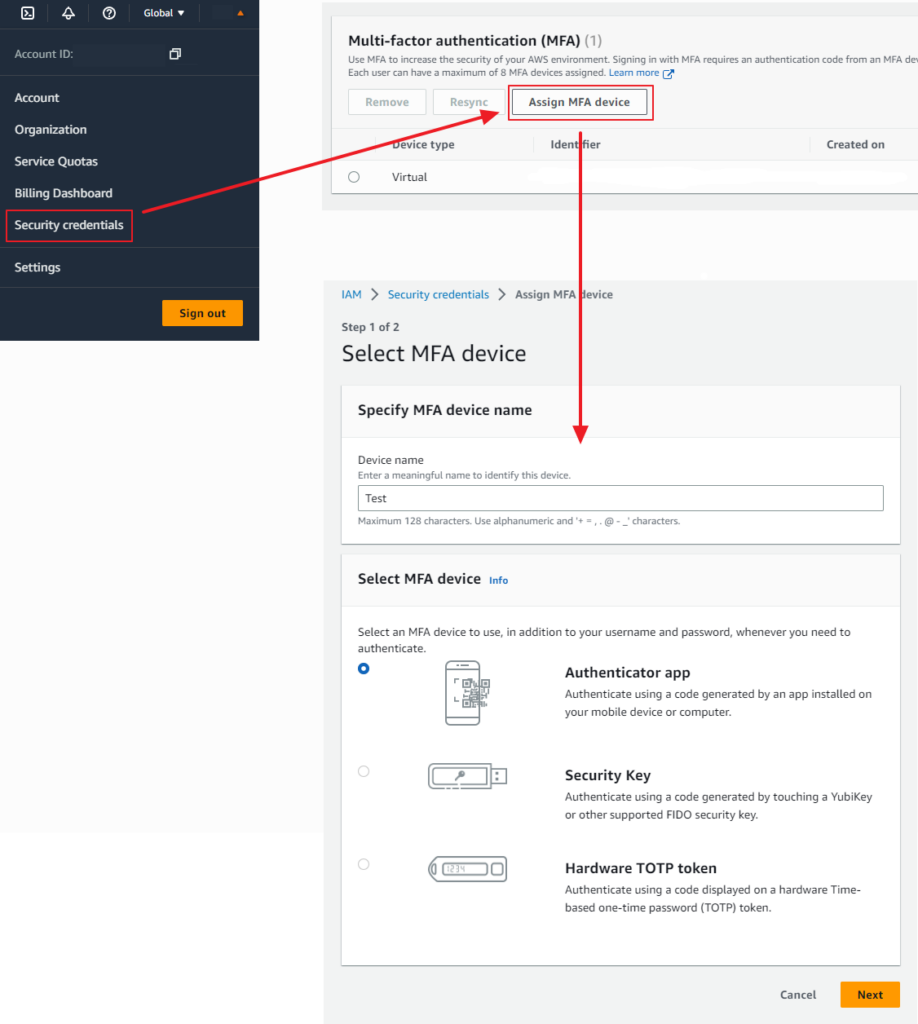

To enable MFA for your AWS root account, follow these steps:

- Sign in to the AWS Management Console with your root account credentials.

- Choose your account name at the top right of the console, then choose ‘Security credentials.’

- In the ‘Multi-Factor Authentication (MFA)’ section, click on ‘Assign MFA device.’

- Follow the on-screen instructions to set up your preferred MFA device, such as a virtual MFA application or a hardware MFA device.

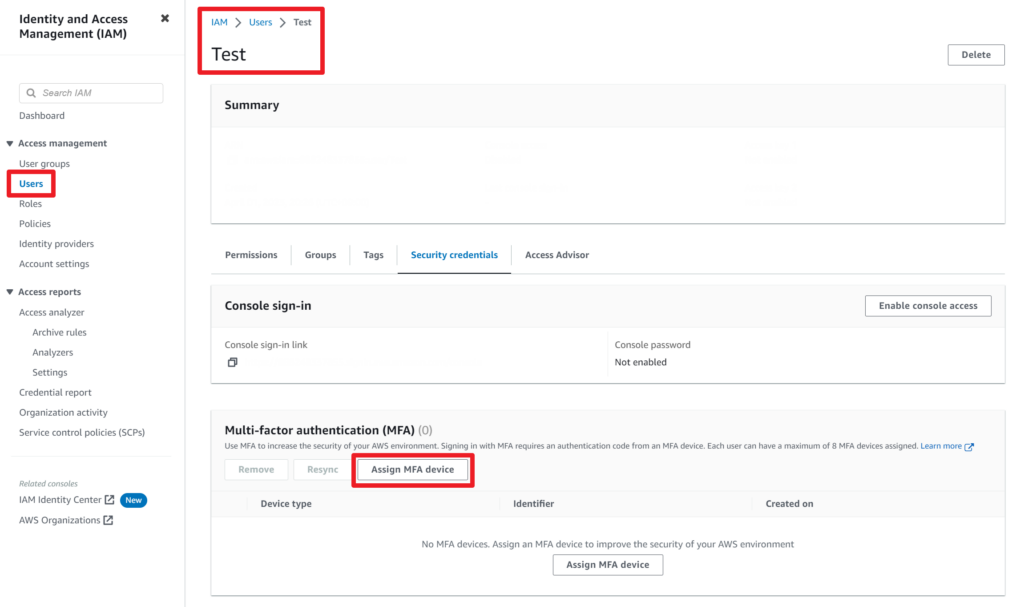

To enable MFA for IAM users, follow these steps:

- Sign in to the AWS Management Console with an IAM user that has the necessary permissions to manage other IAM users.

- Navigate to the ‘IAM’ service and choose ‘Users’ in the navigation pane.

- Choose the IAM user for which you want to enable MFA.

- On the user’s details page, choose the ‘Security credentials’ tab.

- Locate the ‘Multi-factor authentication (MFA)’ section and click on ‘Assign MFA device’.

- Follow the on-screen instructions to set up an MFA device for the selected IAM user.

Enabling MFA for your AWS accounts significantly enhances your security posture by adding an additional layer of protection against unauthorized access.
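Assigning an MFA device does not by itself make MFA mandatory for API calls. A common pattern from AWS's IAM documentation is a Deny statement that uses NotAction and the aws:MultiFactorAuthPresent condition key with BoolIfExists. The sketch below builds such a policy and adds a deliberately simplified local evaluator to show why BoolIfExists matters: requests signed with long-term access keys do not carry the key at all, so a plain Bool check would not catch them. The exempt action list and the helper names are assumptions, not a copy of AWS's published example.

```python
from typing import Optional

# Actions a user still needs without MFA so they can enroll a device (assumed list).
MFA_SETUP_ACTIONS = [
    "iam:CreateVirtualMFADevice",
    "iam:EnableMFADevice",
    "iam:GetUser",
    "iam:ListMFADevices",
    "iam:ListVirtualMFADevices",
    "iam:ResyncMFADevice",
    "sts:GetSessionToken",
]


def require_mfa_policy(exempt_actions: list[str]) -> dict:
    """Deny everything except exempt_actions unless the request used MFA."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyAllExceptListedIfNoMFA",
                "Effect": "Deny",
                "NotAction": exempt_actions,
                "Resource": "*",
                # BoolIfExists also matches when the key is absent entirely.
                "Condition": {"BoolIfExists": {"aws:MultiFactorAuthPresent": "false"}},
            }
        ],
    }


def would_deny(policy: dict, action: str, mfa_present: Optional[bool]) -> bool:
    """Simplified evaluation of the single statement above.

    Uses exact action matching (real IAM also supports wildcards);
    mfa_present=None models a request where the context key is missing.
    """
    stmt = policy["Statement"][0]
    if action in stmt["NotAction"]:
        return False  # NotAction: the deny does not apply to these actions
    if mfa_present is None:
        return True  # "...IfExists": a missing key satisfies the condition
    expected = stmt["Condition"]["BoolIfExists"]["aws:MultiFactorAuthPresent"]
    return str(mfa_present).lower() == expected
```

You would typically attach a policy like this to an IAM group. CLI users then call sts:GetSessionToken with their MFA code to obtain temporary credentials that carry the MFA context.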

Use AWS Organizations to manage multiple accounts

If you manage multiple AWS accounts, leveraging AWS Organizations can be a powerful way to streamline the management of your accounts and enhance security. AWS Organizations allows you to consolidate multiple AWS accounts into a single organization, enabling you to create a hierarchical structure with consolidated billing, centralized management of security policies, and resource sharing across accounts.

Using AWS Organizations comes with several benefits:

- Simplified billing: Consolidate your AWS accounts’ billing information into a single payer account, making it easier to monitor and manage your expenses.

- Centralized policy management: Apply organization-wide policies to control access to AWS services and resources across all accounts in your organization.

- Resource sharing: Use AWS Resource Access Manager (RAM) to share resources such as VPCs, subnets, and transit gateways across accounts within your organization.

Setting up AWS Organizations is particularly useful if you have multiple AWS accounts, but it’s not mandatory for users with a single account. If you have only one account, you can still follow the other best practices in this guide to improve your AWS infrastructure security.

To create an organization, follow these steps:

- Sign in to the AWS Management Console with your AWS root account or an IAM user with administrator permissions.

- Navigate to the ‘AWS Organizations’ service and click on ‘Create organization.’

- Follow the on-screen instructions to set up your organization, invite existing AWS accounts, or create new accounts.

Once you’ve set up your organization, you can start creating organizational units (OUs) to group your accounts and apply service control policies (SCPs) to define the allowed and denied actions for those accounts.
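As an illustration of an SCP guardrail, the sketch below builds a hypothetical policy that stops member accounts from disabling CloudTrail or leaving the organization. SCPs only restrict what IAM policies in member accounts can allow; they never grant permissions, and they do not apply to the management account. The action list and helper names are assumptions chosen for the example.

```python
# Hypothetical guardrail: protect audit logging and organization membership.
PROTECTED_ACTIONS = [
    "cloudtrail:StopLogging",
    "cloudtrail:DeleteTrail",
    "organizations:LeaveOrganization",
]


def guardrail_scp(actions: list[str]) -> dict:
    """Build an SCP document that denies the given actions on all resources."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyAuditAndOrgTampering",
                "Effect": "Deny",
                "Action": actions,
                "Resource": "*",
            }
        ],
    }


def explicitly_denied(scp: dict, action: str) -> bool:
    """Exact-match check for whether any Deny statement names the action."""
    for stmt in scp.get("Statement", []):
        actions = stmt.get("Action", [])
        if isinstance(actions, str):
            actions = [actions]
        if stmt.get("Effect") == "Deny" and action in actions:
            return True
    return False
```

The resulting JSON could be created and attached to an OU through the Organizations console or the CreatePolicy and AttachPolicy APIs.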

You can also use org-formation-cli to set up and manage your AWS Organizations.

org-formation-cli is an infrastructure-as-code (IaC) tool for AWS Organizations. Managing your organization’s resources as code can save considerable setup and maintenance time, and automating these steps removes tedious manual work that is prone to human error.

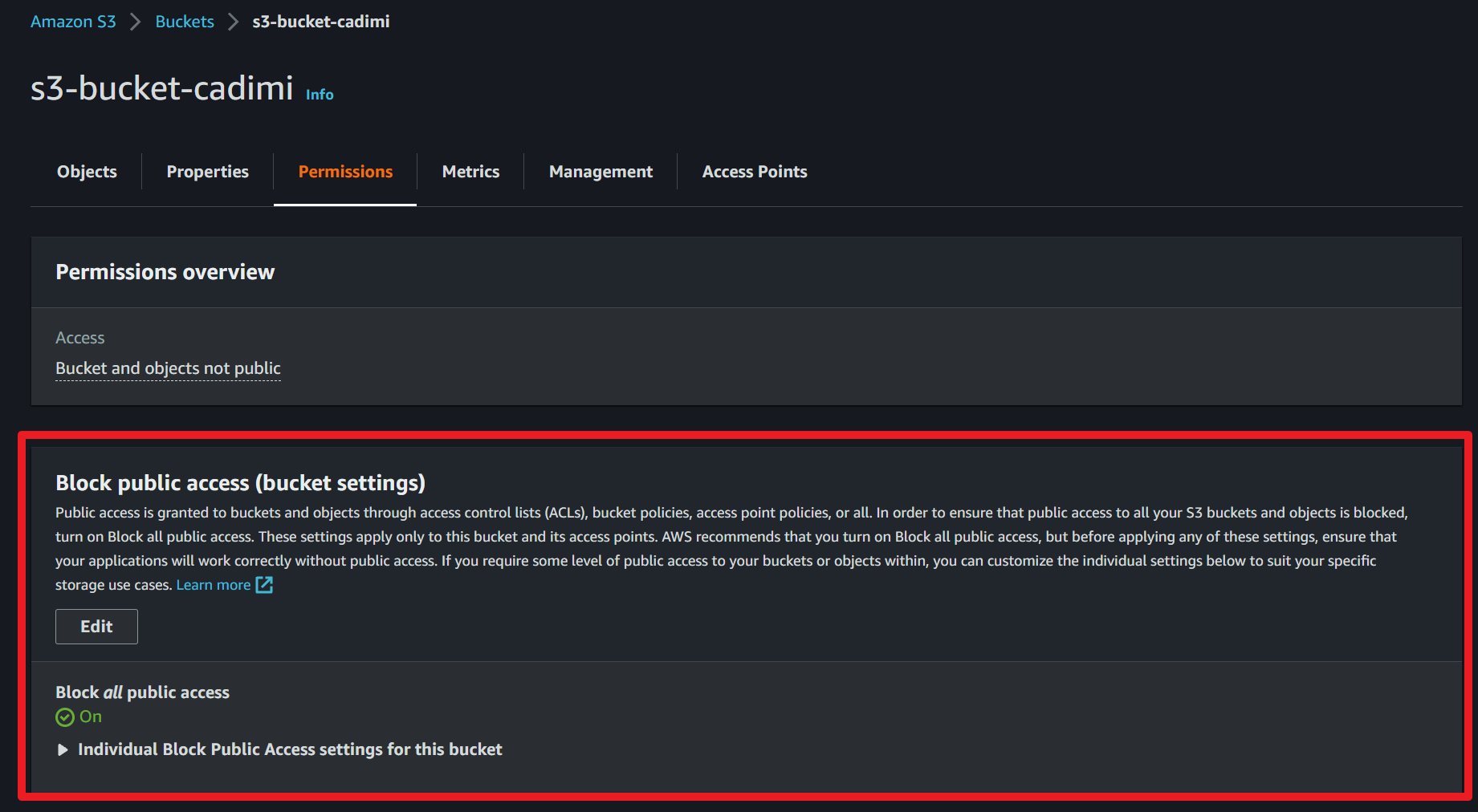

Secure your Amazon S3 buckets

Securing your Amazon S3 buckets is vital to prevent unauthorized access and potential data breaches. By implementing proper access controls, bucket policies, and encryption, you can safeguard your data stored in S3.

Follow these best practices to secure your Amazon S3 buckets:

- Restrict public access: Ensure that your S3 buckets do not have public read or write permissions. Use the S3 Block Public Access feature to block public access at the bucket level or for your entire AWS account.

- Implement bucket policies: Create bucket policies to control access to your S3 buckets. Define rules based on IAM user or role, IP address, or specific actions (such as uploading or deleting objects). Be cautious when using wildcards (*) in bucket policies to avoid accidentally granting overly permissive access.

- Avoid access control lists (ACLs) where possible: AWS now recommends keeping ACLs disabled (the S3 Object Ownership “Bucket owner enforced” setting, which is the default for new buckets) and controlling access with policies instead. ACLs can manage permissions on individual objects, but they become hard to manage as the number of objects grows.

- Enable default encryption: Make sure your data is encrypted at rest. Default bucket encryption automatically encrypts every new object uploaded to the bucket. Since January 2023, S3 applies server-side encryption with Amazon S3-managed keys (SSE-S3) to new objects by default, and you can change the default to server-side encryption with AWS Key Management Service keys (SSE-KMS) if you need control over key policies and auditing of key usage. Client-side encryption, where you encrypt data before uploading it, is a separate option you can use in addition.

- Encrypt existing data: Default encryption applies only to new objects. For objects already stored in your buckets, you can use the S3 CopyObject operation to create encrypted copies, or use S3 Batch Operations to encrypt many objects at once.

- Enable object versioning and lifecycle policies: Enable object versioning to keep multiple versions of an object in your bucket, providing protection against accidental deletion or overwrite. Additionally, use lifecycle policies to automatically transition old object versions to lower-cost storage classes or delete them when they are no longer needed.

- Force SSL on Amazon S3 for static web content hosting: If you use an S3 bucket to host static web content, it is essential to enforce SSL/TLS encryption for data in transit. There are two ways to achieve this.

First, you can put Amazon CloudFront in front of your S3 bucket. Create a CloudFront distribution with your bucket as the origin and set its “Viewer Protocol Policy” to “Redirect HTTP to HTTPS” or “HTTPS Only”, so that visitors always access your content over an encrypted connection.

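Second, you can add a bucket policy that denies any request to the bucket that is not made over HTTPS. In the example below, replace bucket_name with the name of your bucket. The policy lists both the bucket ARN and the object ARNs (bucket_name/*), because bucket-level actions such as s3:ListBucket are evaluated against the bucket ARN rather than the object ARNs. Note that S3 website endpoints do not support HTTPS, so this policy applies to requests made to the bucket’s REST endpoint (for example, from CloudFront or the AWS SDKs); if you serve content directly from the website endpoint, use the CloudFront approach instead.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "DenyInsecureTransport",
      "Effect": "Deny",
      "Principal": "*",
      "Action": "s3:*",
      "Resource": [
        "arn:aws:s3:::bucket_name",
        "arn:aws:s3:::bucket_name/*"
      ],
      "Condition": {
        "Bool": { "aws:SecureTransport": "false" }
      }
    }
  ]
}
```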
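To audit existing buckets, you could parse each bucket policy (for example, the JSON string returned by the S3 GetBucketPolicy API) and look for a Deny statement on non-TLS requests. The sketch below is a minimal, assumption-laden check: it only recognizes the Bool/aws:SecureTransport form, requires the statement to cover both the bucket ARN and its object ARNs, and the function name enforces_tls is hypothetical.

```python
def enforces_tls(policy: dict, bucket: str) -> bool:
    """True if a Deny statement blocks non-TLS requests to the bucket and its objects."""
    required = {f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"}
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):
        statements = [statements]
    for stmt in statements:
        if stmt.get("Effect") != "Deny" or stmt.get("Principal") not in ("*", {"AWS": "*"}):
            continue
        actions = stmt.get("Action", [])
        actions = [actions] if isinstance(actions, str) else actions
        if "s3:*" not in actions:
            continue
        # The value may be written as the string "false" or as JSON false.
        secure = stmt.get("Condition", {}).get("Bool", {}).get("aws:SecureTransport")
        if str(secure).lower() != "false":
            continue
        resources = stmt.get("Resource", [])
        resources = [resources] if isinstance(resources, str) else resources
        if required.issubset(resources):
            return True
    return False
```

A policy that lists only arn:aws:s3:::bucket_name/* fails this check, because bucket-level requests would still be allowed over plain HTTP.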

By implementing these best practices, you can significantly enhance the security of your Amazon S3 buckets and protect your valuable data from unauthorized access.
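Several of these practices map to a single S3 API call each. The sketch below only builds the request parameters for three real boto3 S3 client methods (put_public_access_block, put_bucket_encryption, and put_bucket_versioning) so the shape of each setting is visible; it does not call AWS. The function name s3_baseline and the choice of defaults are assumptions.

```python
from typing import Optional


def s3_baseline(kms_key_arn: Optional[str] = None) -> dict:
    """Request parameters for three S3 APIs, keyed by boto3 client method name."""
    if kms_key_arn:
        # SSE-KMS with an S3 Bucket Key to reduce the number of KMS requests.
        default_sse = {"SSEAlgorithm": "aws:kms", "KMSMasterKeyID": kms_key_arn}
        bucket_key = True
    else:
        default_sse = {"SSEAlgorithm": "AES256"}  # SSE-S3
        bucket_key = False
    return {
        "put_public_access_block": {
            "PublicAccessBlockConfiguration": {
                "BlockPublicAcls": True,
                "IgnorePublicAcls": True,
                "BlockPublicPolicy": True,
                "RestrictPublicBuckets": True,
            }
        },
        "put_bucket_encryption": {
            "ServerSideEncryptionConfiguration": {
                "Rules": [
                    {
                        "ApplyServerSideEncryptionByDefault": default_sse,
                        "BucketKeyEnabled": bucket_key,
                    }
                ]
            }
        },
        "put_bucket_versioning": {"VersioningConfiguration": {"Status": "Enabled"}},
    }
```

With boto3 installed and credentials configured, you could apply each entry as getattr(s3, method)(Bucket=name, **params), where s3 = boto3.client("s3"); that step is not shown here.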

Utilize AWS Security Groups and Network Access Control Lists (NACLs)

Using AWS Security Groups and Network Access Control Lists (NACLs) allows you to control inbound and outbound traffic in your AWS environment effectively. These security mechanisms act as virtual firewalls to secure your Amazon EC2 instances, Amazon RDS instances, and other resources within your VPC.

- Security Groups: Security Groups are stateful firewalls that control traffic at the instance (network interface) level. They support allow rules only, and because they are stateful, return traffic for an allowed connection is permitted automatically. A Security Group can be associated with multiple instances, letting you apply consistent rules across your environment. Best practices for Security Groups include:

  - Create separate Security Groups for different resource types and roles, such as web servers, database servers, and application servers.

  - Apply the principle of least privilege by allowing only the traffic your instances need.

  - Regularly review and update your Security Groups so they reflect the current requirements of your environment.

- Network Access Control Lists (NACLs): NACLs are stateless firewalls that control traffic at the subnet level within your VPC. Their rules can allow or deny traffic and are evaluated in order by rule number. Because NACLs are stateless, you must explicitly allow return traffic, typically on the ephemeral port range. NACLs complement Security Groups with an additional layer of security. Best practices for NACLs include:

  - Use NACLs as a secondary defense mechanism in addition to Security Groups.

  - Apply the principle of least privilege by allowing only the traffic your subnets need.

  - Regularly review and update your NACLs so they reflect the current requirements of your environment.
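Reviews go faster with a simple automated check. The sketch below models Security Group ingress rules with a small dataclass whose fields loosely mirror the IpProtocol, FromPort, ToPort, and CidrIp/CidrIpv6 fields returned by EC2's DescribeSecurityGroups, and flags rules open to the whole internet on anything other than an assumed set of public web ports. The class, function, and port set are hypothetical choices for illustration.

```python
import ipaddress
from dataclasses import dataclass

# Ports assumed to be legitimately internet-facing (e.g. a public web tier).
PUBLIC_PORTS = {80, 443}


@dataclass
class IngressRule:
    """A simplified inbound rule: protocol, port range, and source CIDR."""
    protocol: str  # "tcp", "udp", or "-1" for all protocols
    from_port: int
    to_port: int
    cidr: str


def world_open_findings(rules: list[IngressRule]) -> list[str]:
    """Flag rules that expose non-public ports (or all traffic) to any address."""
    findings = []
    for rule in rules:
        if ipaddress.ip_network(rule.cidr).prefixlen != 0:
            continue  # only 0.0.0.0/0 and ::/0 count as "the whole internet"
        if rule.protocol == "-1":
            findings.append(f"all traffic open to {rule.cidr}")
        elif not set(range(rule.from_port, rule.to_port + 1)) <= PUBLIC_PORTS:
            findings.append(f"{rule.protocol}/{rule.from_port}-{rule.to_port} open to {rule.cidr}")
    return findings
```

Because Security Groups are stateful, inbound rules like these are enough to describe what can reach an instance; an equivalent NACL review would also have to consider outbound rules for return traffic.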

By implementing both Security Groups and NACLs, you can create a robust security architecture that effectively controls traffic flow and reduces the risk of unauthorized access in your AWS environment.

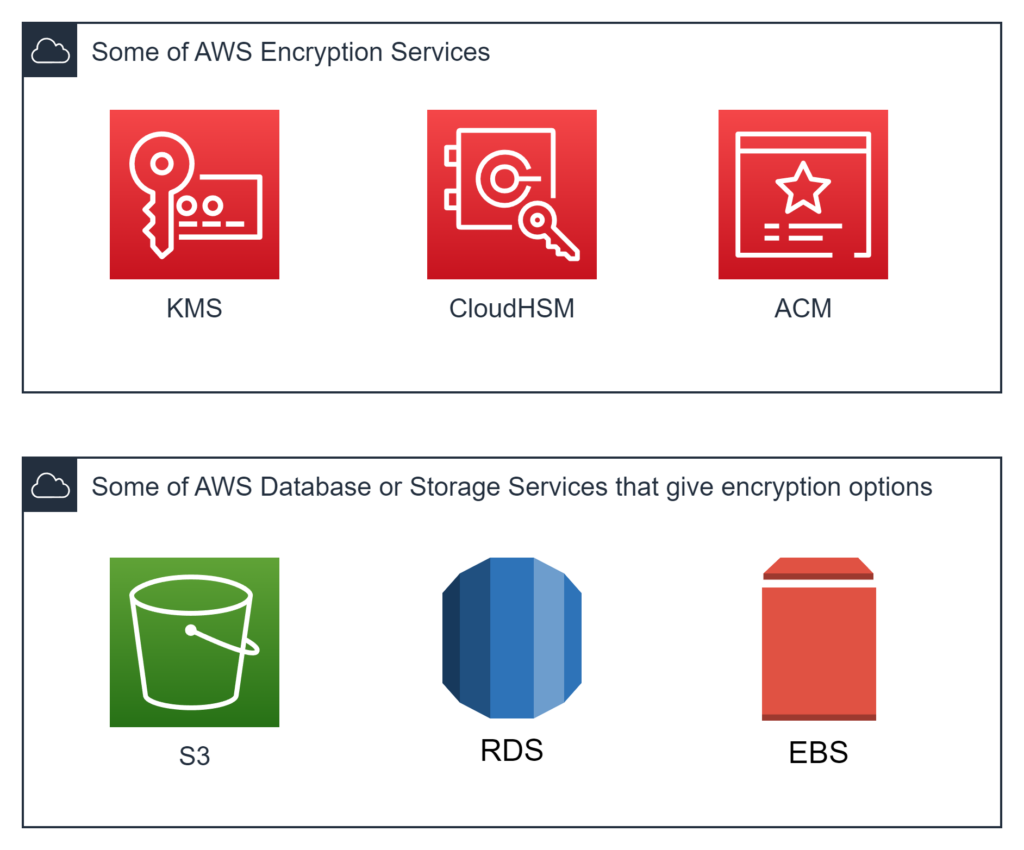

Encrypt data at rest and in transit

Encryption is a critical aspect of securing your data in AWS, both when it is stored (at rest) and when it is being transmitted between services or users (in transit). Implementing encryption best practices can help you protect sensitive information from unauthorized access and data breaches.

- Encrypt data at rest: AWS offers encryption options for data stored in services such as Amazon S3, Amazon RDS, Amazon EBS, and Amazon DynamoDB. Use the service-specific features, such as server-side encryption with Amazon S3-managed keys (SSE-S3), server-side encryption with AWS KMS keys (SSE-KMS), client-side encryption, or an AWS KMS custom key store backed by AWS CloudHSM when you need keys held in dedicated hardware security modules.

- Encrypt data in transit: To secure data during transmission, use SSL/TLS when accessing AWS services and when transmitting data between your services and users. For example:

  - Use HTTPS when connecting to your Amazon S3 buckets or Amazon API Gateway, and when users access your application through an Application Load Balancer (ALB) or Amazon CloudFront.

  - Use AWS Certificate Manager (ACM) to manage your SSL/TLS certificates, making it easier to deploy and renew certificates across your AWS resources.

  - Set up a VPN or AWS Direct Connect for secure communication between your on-premises network and your AWS environment. Direct Connect is a private link but is not encrypted by default, so run a VPN over it (or use MACsec where supported) if you need encryption in transit.
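AWS SDKs already use HTTPS by default, but your own service-to-service calls need the same care. As a minimal Python sketch (the helper names are hypothetical), a client can verify certificates and hostnames and refuse anything older than TLS 1.2 using only the standard library.

```python
import ssl
import urllib.request


def strict_tls_context() -> ssl.SSLContext:
    """Client context that verifies certificates/hostnames and refuses TLS < 1.2."""
    ctx = ssl.create_default_context()  # system CA bundle, CERT_REQUIRED, hostname checks
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def fetch(url: str) -> bytes:
    """Fetch a URL, rejecting plain HTTP before any data is sent."""
    if not url.startswith("https://"):
        raise ValueError("refusing to send data over plain HTTP")
    with urllib.request.urlopen(url, context=strict_tls_context(), timeout=10) as resp:
        return resp.read()
```

Rejecting plain HTTP in the client complements server-side controls such as an ALB HTTP-to-HTTPS redirect or the S3 aws:SecureTransport deny, rather than replacing them.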

By implementing encryption both at rest and in transit, you can significantly enhance the security of your data and reduce the risk of unauthorized access and potential data breaches.

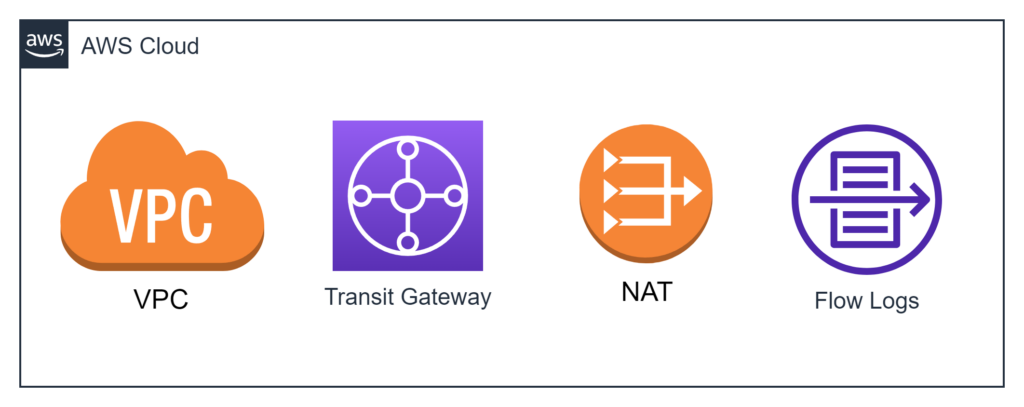

Use Amazon VPC to isolate your resources

Amazon Virtual Private Cloud (VPC) enables you to create a private, isolated section of the AWS Cloud, providing a secure and scalable environment to host your resources. By effectively utilizing Amazon VPC, you can enhance the security posture of your AWS infrastructure.

- VPC Design: Organize your VPCs according to your organizational needs and security requirements. You can create separate VPCs for different environments (e.g., development, staging, production) or business units. Use VPC peering or AWS Transit Gateway to establish secure communication between VPCs if needed.

- VPC Flow Logs: Enable VPC Flow Logs to monitor and capture IP traffic information for your VPC, subnets, and network interfaces. Use this data to analyze traffic patterns, troubleshoot connectivity issues, and identify potential security threats.

- Subnet Segmentation: Divide your VPC into multiple subnets, segregating resources based on their roles and security levels. For example, separate your public-facing web servers from your private database servers by placing them in different subnets.

- Network Security: Use Security Groups and Network Access Control Lists (NACLs) to control inbound and outbound traffic within your VPC, applying the principle of least privilege to minimize potential attack vectors.

- NAT Gateways and Bastion Hosts: Implement NAT gateways to allow outbound internet access for instances in your private subnets while blocking unsolicited inbound connections from the internet. For administrative access to private instances, use a hardened bastion host, or AWS Systems Manager Session Manager, which does not require opening inbound SSH ports.
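Subnet segmentation starts with a CIDR plan. The sketch below uses Python's ipaddress module to carve equal-size subnets for each tier in each Availability Zone; the VPC CIDR, tier names, AZ names, and the helper names are example assumptions. Whether a subnet is actually "public" is decided by its route table (a route to an internet gateway), not by its address range.

```python
import ipaddress

# AWS reserves the first four addresses and the last address in every subnet.
AWS_RESERVED_PER_SUBNET = 5


def plan_subnets(vpc_cidr: str, tiers: list[str], azs: list[str], new_prefix: int = 24) -> dict:
    """Assign consecutive equal-size subnets to each (tier, AZ) pair."""
    blocks = ipaddress.ip_network(vpc_cidr).subnets(new_prefix=new_prefix)
    plan = {}
    for tier in tiers:
        for az in azs:
            try:
                plan[(tier, az)] = next(blocks)
            except StopIteration:
                raise ValueError(f"{vpc_cidr} is too small for this plan") from None
    return plan


def usable_hosts(subnet: ipaddress.IPv4Network) -> int:
    """Addresses left for resources after AWS's per-subnet reservations."""
    return subnet.num_addresses - AWS_RESERVED_PER_SUBNET
```

For example, plan_subnets("10.0.0.0/16", ["public", "private"], ["us-east-1a", "us-east-1b"]) places the public tier in 10.0.0.0/24 and 10.0.1.0/24 and the private tier in 10.0.2.0/24 and 10.0.3.0/24, each with 251 usable addresses.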

By leveraging Amazon VPC and its features, you can create a well-structured and secure network environment for your AWS resources.

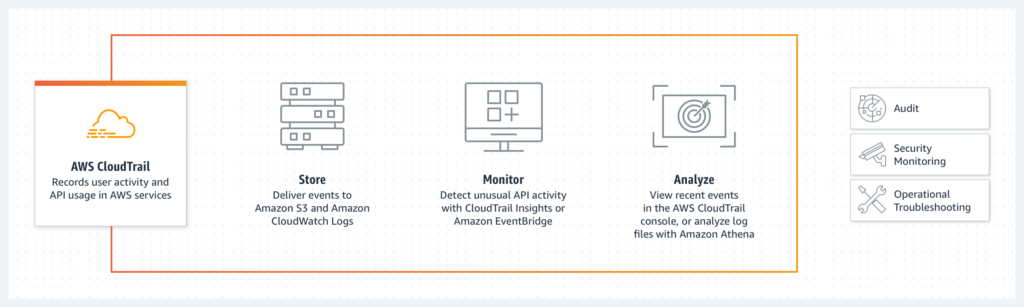

Consider AWS CloudTrail and Amazon GuardDuty

Monitoring and auditing your AWS environment are essential for maintaining security and identifying potential threats. AWS CloudTrail and Amazon GuardDuty are powerful services that can help you achieve these goals. Although they can add noticeable cost, the security value and visibility they provide usually make the investment worthwhile.

1. AWS CloudTrail: CloudTrail records the AWS API calls made in your account, providing a detailed audit trail of activity in your AWS infrastructure. AWS CloudTrail Lake builds on this by letting you run SQL-based queries on your events, and it can store and query events from multiple sources, including AWS Config, AWS Audit Manager, and external (non-AWS) sources. Best practices for using AWS CloudTrail and CloudTrail Lake include:

   - Enable CloudTrail in all AWS Regions to ensure comprehensive coverage of your account activity.

   - Configure CloudTrail to deliver log files to a secure Amazon S3 bucket, and enable S3 bucket versioning and server-side encryption to protect your log files.

   - Monitor and analyze CloudTrail logs and CloudTrail Lake events using Amazon CloudWatch Logs, AWS Lambda, or third-party tools to identify potential security incidents or unauthorized activity.

   - Understand the costs associated with CloudTrail Lake, such as AWS KMS costs for encryption and decryption, as well as CloudTrail charges for event data stores and queries.

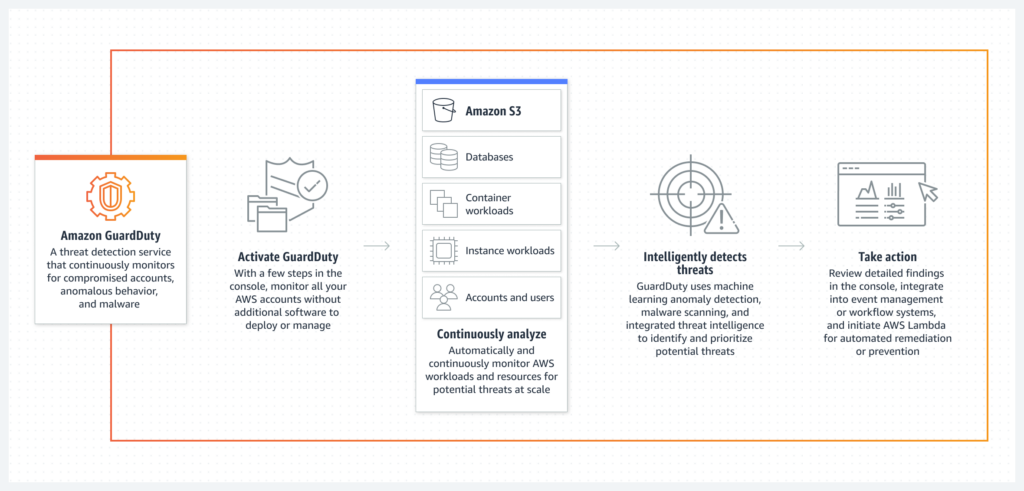

2. Amazon GuardDuty: GuardDuty is a managed threat detection service that analyzes AWS logs (including CloudTrail logs) and other data sources to identify unusual or unauthorized behavior. Best practices for using Amazon GuardDuty include:

   - Enable GuardDuty in all AWS Regions to ensure comprehensive threat detection coverage.

   - Route GuardDuty findings to Amazon SNS through an Amazon EventBridge rule to receive near-real-time alerts on potential security issues.

   - Review and respond to GuardDuty findings promptly, using AWS Lambda or third-party tools to automate response actions where possible.
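As a small example of analyzing CloudTrail data yourself, the sketch below scans one CloudTrail log file for risky console sign-ins: any root login, and successful logins made without MFA. CloudTrail delivers log files to S3 as gzip-compressed JSON with a top-level Records array; the sketch assumes you have already downloaded and decompressed one file. The field names (eventName, userIdentity.type, responseElements.ConsoleLogin, additionalEventData.MFAUsed) follow CloudTrail's ConsoleLogin record format, but the function is a hypothetical illustration, not a substitute for GuardDuty or CloudWatch alarms.

```python
import json


def risky_console_logins(log_file_json: str) -> list[str]:
    """Flag root console logins and successful console logins without MFA."""
    findings = []
    for record in json.loads(log_file_json).get("Records", []):
        if record.get("eventName") != "ConsoleLogin":
            continue
        identity = record.get("userIdentity") or {}
        who = identity.get("arn", "unknown")
        # responseElements / additionalEventData can be null in real records.
        success = (record.get("responseElements") or {}).get("ConsoleLogin") == "Success"
        mfa_used = (record.get("additionalEventData") or {}).get("MFAUsed") == "Yes"
        if identity.get("type") == "Root":
            findings.append(f"root console login: {who}")
        elif success and not mfa_used:
            findings.append(f"login without MFA: {who}")
    return findings
```

Root logins are flagged even when MFA was used, because routine root usage is itself worth investigating.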

By implementing AWS CloudTrail, CloudTrail Lake, and Amazon GuardDuty, you can gain visibility into your AWS environment, detect potential threats, and respond effectively to security incidents.

Continuously monitor and audit your environment

Maintaining a secure AWS environment requires continuous monitoring and auditing of your infrastructure. AWS provides various native services, such as Amazon CloudWatch and AWS Config, to help you monitor your environment and ensure compliance with your security policies. Additionally, third-party tools like Trivy and Checkov can be used for pre-deployment audits of Infrastructure as Code (IaC) templates.

- Amazon CloudWatch: This service enables you to collect, analyze, and visualize metrics, logs, and events from your AWS resources. CloudWatch can help you detect anomalies, set alarms, and automate actions based on predefined thresholds or patterns.

- AWS Config: This service continuously records your AWS resource configurations and evaluates them against rules (your own or AWS managed rules), so you can audit how configurations change over time. It helps ensure compliance with your organization’s policies and industry best practices.

Also, when using IaC to create and manage your AWS infrastructure, it is highly recommended to verify the security and compliance of your IaC templates before deployment. Trivy and Checkov are two popular pre-deployment audit tools for IaC:

- Trivy: An open-source vulnerability scanner that detects security issues in container images and IaC templates. Trivy supports scanning for a variety of IaC formats, such as Terraform, CloudFormation, and Kubernetes manifests. It helps identify misconfigurations and vulnerabilities in your IaC templates before deployment, reducing the risk of security incidents.

- Checkov: A static code analysis tool for IaC that scans your templates for security and compliance issues. Checkov supports various IaC formats, including Terraform, CloudFormation, Kubernetes, and Azure Resource Manager templates. It comes with a wide range of built-in policies and can be extended with custom policies, ensuring that your IaC templates comply with best practices and organizational requirements.
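Checkov and Trivy ship hundreds of built-in checks. To show the idea behind a pre-deployment check (not either tool's API), here is a stdlib-only sketch that inspects a CloudFormation template in JSON form for two of the issues discussed earlier: S3 buckets without an explicit PublicAccessBlockConfiguration, and Security Groups exposing SSH or RDP to 0.0.0.0/0. The sensitive-port list and the function name are assumptions, and values computed by intrinsic functions (such as Ref) are skipped.

```python
SENSITIVE_PORTS = {22, 3389}  # SSH and RDP (an assumed list)


def check_template(template: dict) -> list[str]:
    """Tiny pre-deployment check over a CloudFormation template (JSON form)."""
    findings = []
    for name, resource in template.get("Resources", {}).items():
        rtype = resource.get("Type")
        props = resource.get("Properties", {})
        # New buckets block public access by default, but explicit config is clearer.
        if rtype == "AWS::S3::Bucket" and "PublicAccessBlockConfiguration" not in props:
            findings.append(f"{name}: S3 bucket without PublicAccessBlockConfiguration")
        if rtype == "AWS::EC2::SecurityGroup":
            for rule in props.get("SecurityGroupIngress", []):
                if rule.get("CidrIp") != "0.0.0.0/0":
                    continue
                try:
                    from_port = int(rule.get("FromPort", 0))
                    to_port = int(rule.get("ToPort", 65535))
                except (TypeError, ValueError):
                    continue  # port given by an intrinsic function; not resolved here
                if any(from_port <= p <= to_port for p in SENSITIVE_PORTS):
                    findings.append(f"{name}: port {from_port}-{to_port} open to the internet")
    return findings
```

Dedicated tools go much further (resolving parameters, covering Terraform and Kubernetes, and supporting suppressions), so a sketch like this is only useful for a rule that none of them provides.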

By continuously monitoring and auditing your AWS environment with native services like CloudWatch and AWS Config, and using pre-deployment audit tools like Trivy and Checkov for IaC, you can proactively identify and remediate security issues, maintain compliance, and reduce the risk of security incidents.

Conclusion

In conclusion, the security best practices outlined in this post serve as a starting point for protecting your AWS environment. However, it is essential to recognize that these practices are only a part of a comprehensive security strategy. To ensure the highest level of security, you should continuously assess your infrastructure, stay up-to-date with the latest security recommendations, and adopt a proactive approach.

Leveraging appropriate third-party tools can sometimes provide similar or even better security capabilities at a lower cost compared to native AWS services. Therefore, it’s important to research and explore various tools to find the ones that best suit your specific needs and requirements.

Thank you for reading this post, and I hope it serves as a helpful guide on your journey to securing your AWS environment. Remember, security is an ongoing process that requires diligence, expertise, and adaptability. Stay safe, and happy securing!